Machine learning can achieve impressive results when trained on human-labeled datasets. However, domains where labeled data is scarce, such as Earth science, remain hard. Self-supervised learning (SSL) is designed to address this challenge. Using techniques that range from representation clustering to comparing random transforms of an image, self-supervised learning for computer vision is a growing area of machine learning with a simple goal: learn meaningful vector representations of images, without human labels for each image, such that similar images have similar vector representations.

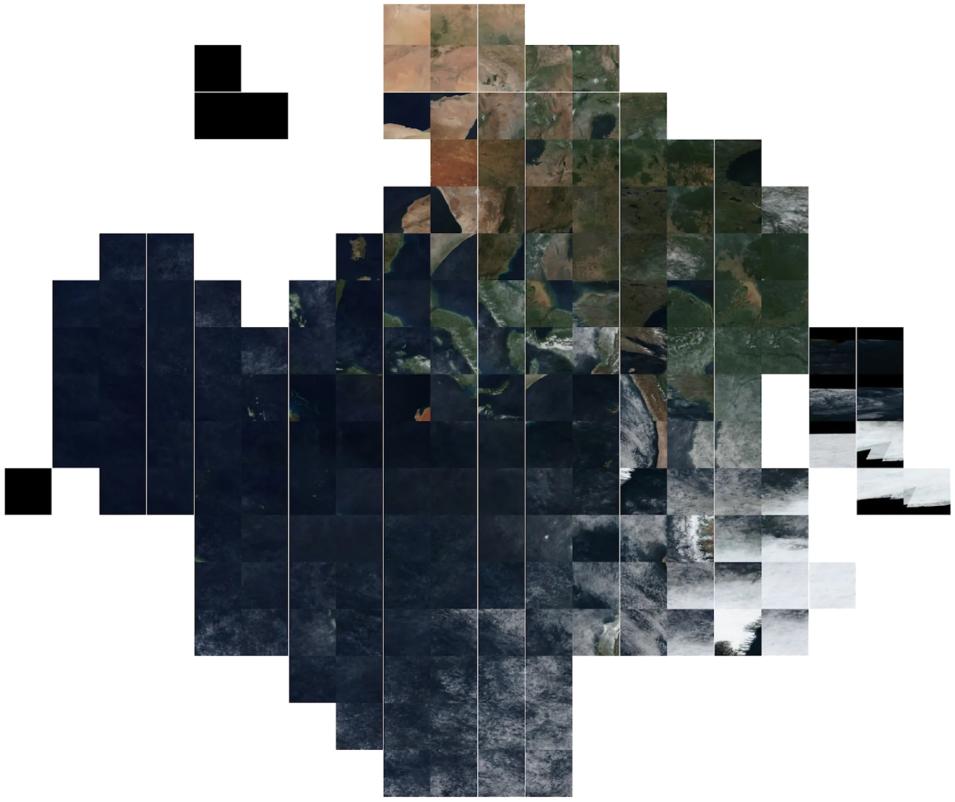

In particular, remote sensing is characterized by a huge number of images and, depending on the data survey, a reasonable amount of metadata that contextualizes each image, such as location, time of day, temperature, and wind. However, when a phenomenon of interest cannot be found through a metadata search alone, research teams often spend hundreds of hours on visual inspection, combing through data such as NASA’s Worldview, which covers all 197 million square miles of the Earth’s surface every day and spans 20 years.

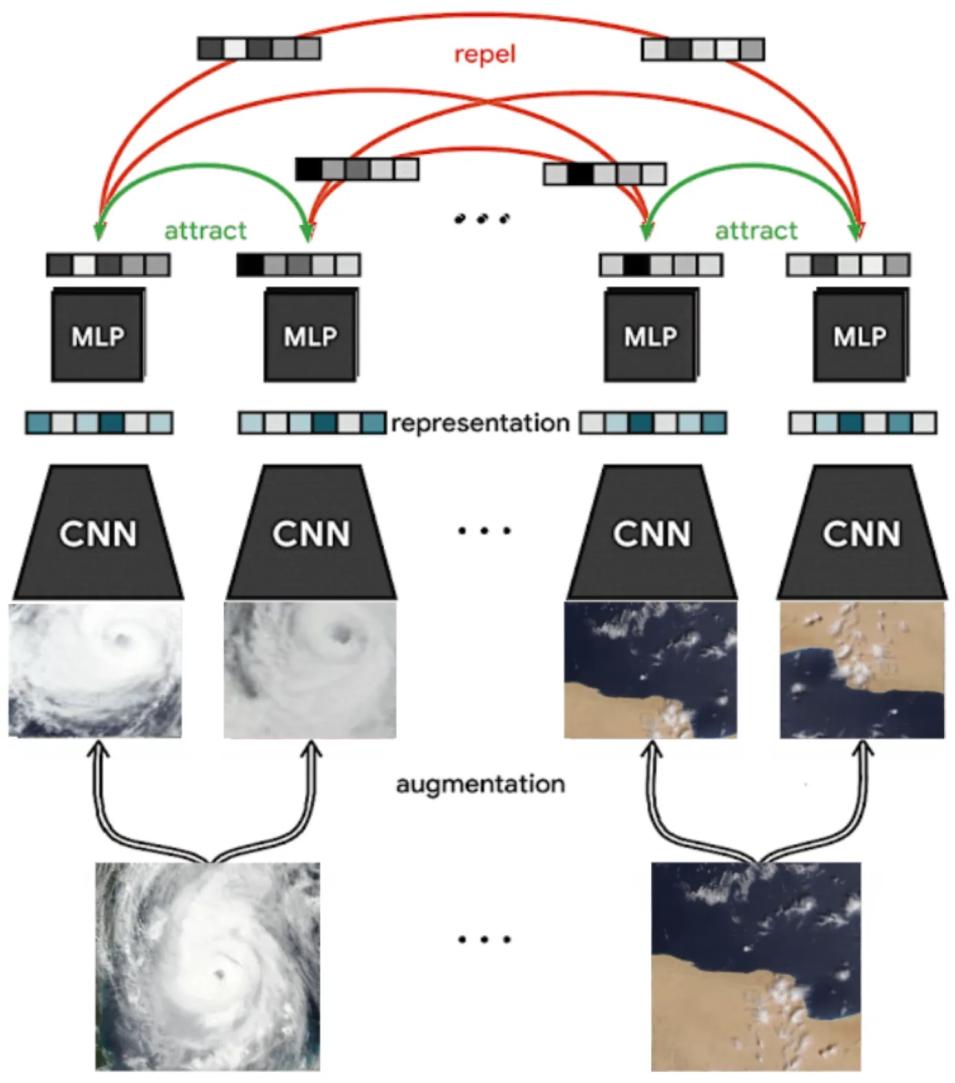

This is the fundamental challenge addressed by the collaboration between IMPACT and the SpaceML initiative, which produced the Worldview image search pipeline. A key component of that pipeline is the self-supervised learner, which employs self-supervised learning (SSL) to build the model store. The SSL model sits on top of an unlabeled pool of data and circumvents the random search process. Using the vector representations generated by the SSL model, researchers can provide a single reference image and search for similar images, enabling rapid curation of datasets of interest from massive unlabeled datasets.
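
To make the reference-image search concrete, here is a minimal sketch of ranking a pool of image embeddings by their similarity to a single query embedding. It is not the pipeline's actual implementation: the function names `cosine_similarity` and `search_similar`, the use of cosine similarity as the metric, and the tiny three-dimensional vectors are all assumptions chosen for illustration. In practice the vectors would come from a trained SSL model and would have far more dimensions.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero norm."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search_similar(
    query: Sequence[float],
    embeddings: Sequence[Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[int, float]]:
    """Return (index, similarity) pairs for the top_k most similar embeddings."""
    scored = [(i, cosine_similarity(query, e)) for i, e in enumerate(embeddings)]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings standing in for SSL model outputs (made-up values).
pool = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.8, 0.2, 0.1],
    [0.0, 0.0, 1.0],
]
reference = [1.0, 0.0, 0.0]  # embedding of the single reference image

results = search_similar(reference, pool, top_k=2)
for index, score in results:
    print(f"image {index}: similarity {score:.3f}")
```

With these toy vectors, images 0 and 2 rank highest because their embeddings point in nearly the same direction as the reference. A brute-force scan like this is linear in the size of the pool; for a very large archive, an approximate nearest-neighbor index would likely be needed instead.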