NASA’s Earth Observing System Data and Information System (EOSDIS) is responsible for one of the world’s largest collections of Earth observing data. Designing and enhancing the systems for providing these data effectively and efficiently to worldwide data users are two primary tasks of EOSDIS System Architect Dr. Christopher Lynnes. Working with his colleagues in NASA’s Earth Science Data and Information System (ESDIS) Project, Dr. Lynnes is preparing for a tremendous growth in the volume of data in the EOSDIS collection and for new ways data users will interact with and use these data.

While the current volume of data in the EOSDIS collection is approximately 34 petabytes (PB), the launch of high-volume data missions such as the Surface Water and Ocean Topography (SWOT) mission and the NASA-Indian Space Research Organisation Synthetic Aperture Radar (NISAR) mission (both scheduled for launch in 2021 or 2022) is expected to increase this volume to approximately 247 PB by 2025, according to estimates by NASA’s Earth Science Data Systems (ESDS) Program. (One petabyte of storage is equivalent to approximately 1.5 million CD-ROM discs.) As Dr. Lynnes observes, this Big Data collection will require not only new ways for data users to use and interact with these data, but also new ways of thinking about what data are.
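
To put these figures in perspective, the arithmetic behind them can be sketched in a few lines of Python. This is only a rough illustration: the 34 PB and 247 PB values come from the estimates above, while the decimal definition of a petabyte (10^15 bytes) and the 700 MB capacity of a CD-ROM are assumptions made for the example, not figures from the ESDS Program.

```python
# Rough sketch of the data-volume arithmetic quoted above.
# Assumptions (not from the article): decimal petabytes and 700 MB CD-ROMs.
PB_IN_BYTES = 10**15
CD_ROM_BYTES = 700 * 10**6

current_pb = 34      # approximate current EOSDIS volume (from the text)
projected_pb = 247   # ESDS Program estimate for 2025 (from the text)

# How many CD-ROMs one petabyte would fill under these assumptions.
cds_per_pb = PB_IN_BYTES / CD_ROM_BYTES

# How many times larger the projected collection is than the current one.
growth_factor = projected_pb / current_pb

print(f"CD-ROMs per petabyte: {cds_per_pb:,.0f}")
print(f"Growth factor by 2025: {growth_factor:.1f}x")
print(f"Projected volume in CD-ROMs: {projected_pb * cds_per_pb:,.0f}")
```

Under these assumptions one petabyte works out to a little over 1.4 million discs, in line with the "approximately 1.5 million" figure, and the projected collection is roughly seven times the size of the current one.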

When we talk about data system architecture, what does this mean and what components are we talking about?

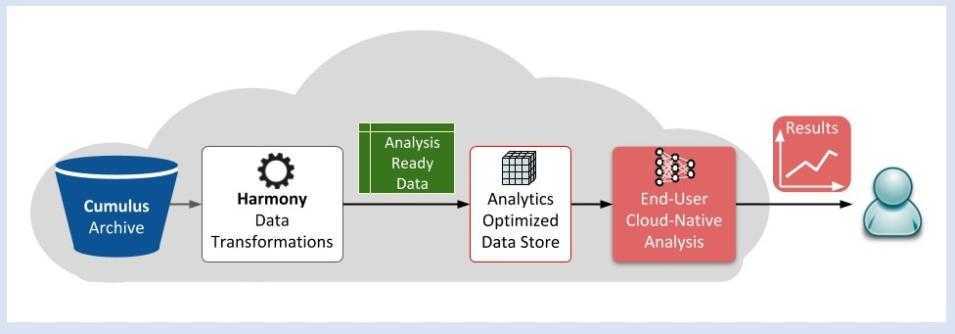

With respect to EOSDIS, and particularly the EOSDIS Distributed Active Archive Centers [DAACs], the term “data system” refers to all of the components that handle the ingest, archive, and distribution or other forms of access for Earth observation data in the EOSDIS collection.

The one thing that all of the DAACs have in common is this aspect of ingest, archive, and distribution. One of the values that EOSDIS adds to this is enterprise-wide subsystems that link all of these DAAC holdings together. These subsystems include the CMR [Common Metadata Repository], a metrics system that [the DAACs] all contribute to, and a search interface for the EOSDIS catalog [Earthdata Search]. We’re also working on a services framework for doing data transformations.

What I do as a system architect, and I do this in partnership with my fellow system architect Katie Baynes, is to make all of these different systems fit together in the most seamless, harmonious way that we can. Katie handles the ingest and archive aspects of our system (what we used to call the “push” side) and I handle mostly the data use side (which is what we used to call the “pull” side). What I try to do is provide the architectural vision for how everything should fit together.

You have been working with NASA Earth observing data and data systems since 1991. How have you seen the EOSDIS data system evolve?

You know, when EOSDIS started there was no World Wide Web. All of our data, or almost all of our data, tended to be stored either off-line on tapes or, in some of the more advanced systems, in what we called a “near-line” system. These would be robotic archives serving either optical disks or tapes. This was the technology almost completely for at least the first several years. It wasn’t until the early-2000s that we started putting most of our data on disk.

Our throughput to end users was much lower than it is today. When we were looking to support the SeaWiFS mission [Sea-viewing Wide Field-of-view Sensor, operational 1997 to 2010], we were concerned about how to support the distribution of 40 gigabytes per day to the end-user community. Back in 1994 that seemed like a real challenge!

It wasn’t really until we got to the early-2000s and were able to put all of our data onto disks that we finally broke through that user throughput barrier that we had until then. This was mostly a factor of disk prices coming down enough that we could afford to purchase enough disks on which we could put all of our instrument data.

Given the expected significant increase in the volume of data in the EOSDIS collection over the next several years, how are you and your team staying ahead of the curve in ensuring that these data will be efficiently and effectively available to worldwide data users?

It’s important to note that this is not the first time we’ve seen this sort of exponential growth. The beginning of NASA’s Earth Observing System [EOS] era [in the late-1990s and early-2000s] had this same surge of growth in the amount of data in the EOSDIS collection. The first couple of years of EOS were difficult as we were trying to get as much data out as we could. Eventually the technology caught up with us and it became much easier to deliver data. Looking at the present, we’re actually fortunate in that I think the technology to deal with these massive volumes of data we’re expecting over the next five to six years—cloud computing technology—actually might be a bit ahead of us.