The Earth science domain is home to highly specialized mathematical models, science data, and scientific principles, and Earth science research depends on language that is just as specialized. Earth science researchers need to find separately developed concepts and bring them together to solve today’s problems. For these researchers, language is an indispensable tool for making that happen. Whether in data keyword tags, textual metadata, or journal articles, the ability to accurately apply words to research and data, and to quickly search for those words, is essential to advancing science.

The popular press has recently reported on the impressive advancements in natural language processing (NLP) tools known as transformers: machine learning (ML) models trained on huge text datasets using millions or even billions of parameters (i.e., terms in the modeling equation). One example is GPT-3, which can generate articles and essays that sound as if they were written by humans. However, these generalized language models are not as well suited to domain-specific tasks, such as those in Earth science.

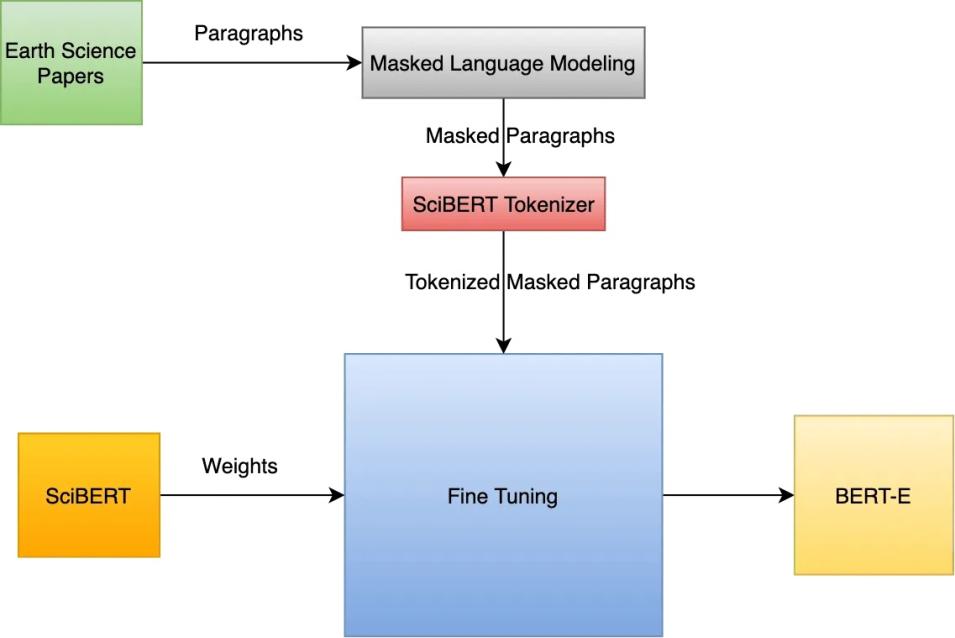

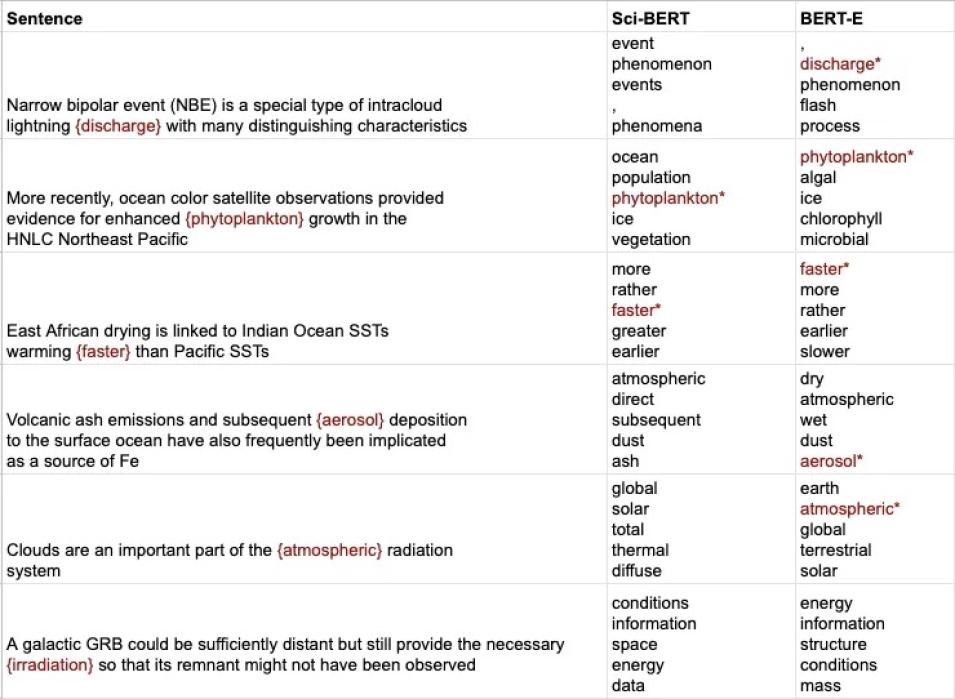

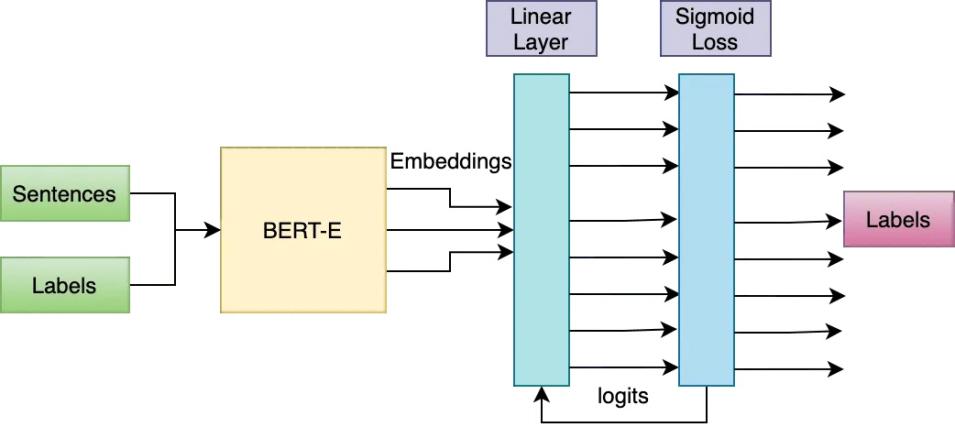

The BERT-E project is an effort by IMPACT’s ML team to develop an industry-standard language model for Earth science based on transformers. Describing transformers in general, IMPACT’s Prasanna Koirala commented:

"I read an essay written by GPT-3, and it was no way any less than what a human would have written or in some ways even better. Ever since then I have been reading and understanding more and more about transformer models."