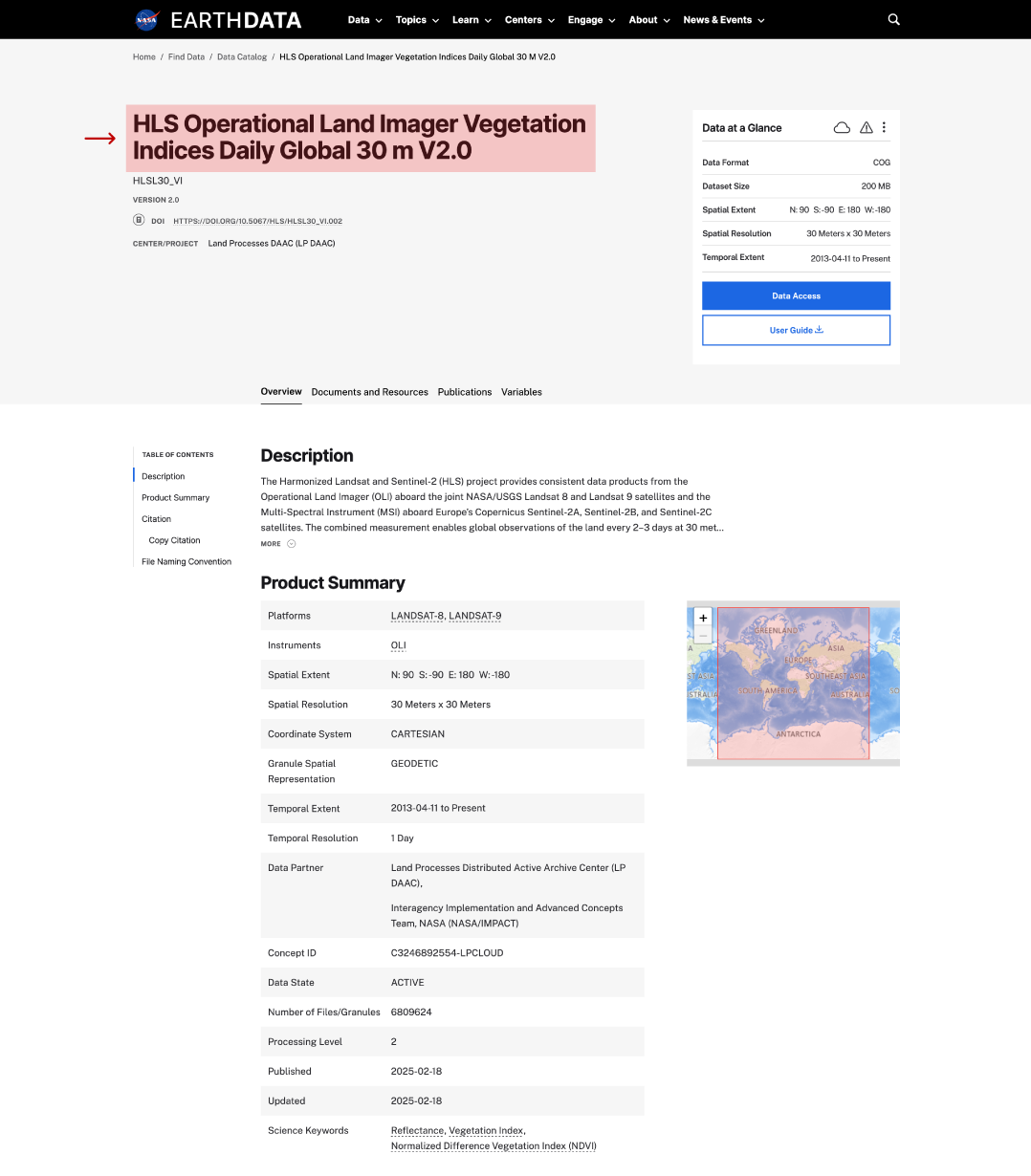

Beta

Products intended to enable users to gain familiarity with the parameters and the data formats.

Provisional

Products defined to facilitate data exploration and process studies that do not require rigorous validation. These data are only partially validated: validation and quality assurance are ongoing, so quality may not be optimal and improvements are continuing.

Validated

High-quality data products that have been fully validated and quality checked, and that are deemed suitable for systematic studies, such as climate change studies, as well as for shorter-term process studies.

Stage 1 Validation: Product accuracy is estimated using a small number of independent measurements obtained from selected locations and time periods and ground-truth/field program efforts.

Stage 2 Validation: Product accuracy is estimated over a significant set of locations and time periods by comparison with reference in situ or other suitable reference data.

Stage 3 Validation: Product accuracy has been assessed. Uncertainties in the product and its associated structure are well quantified from comparison with reference in situ or other suitable reference data.

Stage 4 Validation: Validation results for Stage 3 are systematically updated when new product versions are released and as the time series expands.
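
For readers who filter datasets by maturity in their own tooling, the levels and stages above could be encoded roughly as follows. This is only a minimal sketch: the names `Maturity`, `ValidationStage`, `suitable_for_systematic_studies`, and `meets_stage` are hypothetical and not part of any official API, and treating the stages as an ordered scale (a higher stage implies the lower ones) is an assumption drawn from the way Stage 4 builds on Stage 3.

```python
from enum import Enum, IntEnum
from typing import Optional


class Maturity(Enum):
    """Product maturity levels as named in the definitions above."""
    BETA = "Beta"
    PROVISIONAL = "Provisional"
    VALIDATED = "Validated"


class ValidationStage(IntEnum):
    """Validation stages; the ordering is an assumption of this sketch."""
    STAGE_1 = 1  # small number of independent measurements
    STAGE_2 = 2  # significant set of locations and time periods
    STAGE_3 = 3  # uncertainties well quantified
    STAGE_4 = 4  # Stage 3 results updated with new versions


def suitable_for_systematic_studies(maturity: Maturity) -> bool:
    # Only Validated products are described as suitable for systematic studies.
    return maturity is Maturity.VALIDATED


def meets_stage(stage: Optional[ValidationStage], minimum: ValidationStage) -> bool:
    # A product with no recorded stage never meets a minimum requirement.
    return stage is not None and stage >= minimum
```

For example, `meets_stage(ValidationStage.STAGE_3, ValidationStage.STAGE_2)` evaluates to `True` under the ordering assumption, while a product with no recorded stage fails any minimum.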