In particular, remote sensing produces a vast number of images and, depending on the data survey, a reasonable amount of metadata that contextualizes each image, such as location, time of day, temperature, and wind. However, when a phenomenon of interest cannot be found through a metadata search alone, research teams often spend hundreds of hours on visual inspection, combing through imagery in applications such as NASA Worldview, which enables interactive exploration of all 197 million square miles of Earth's surface using more than 20 years of daily global satellite imagery.

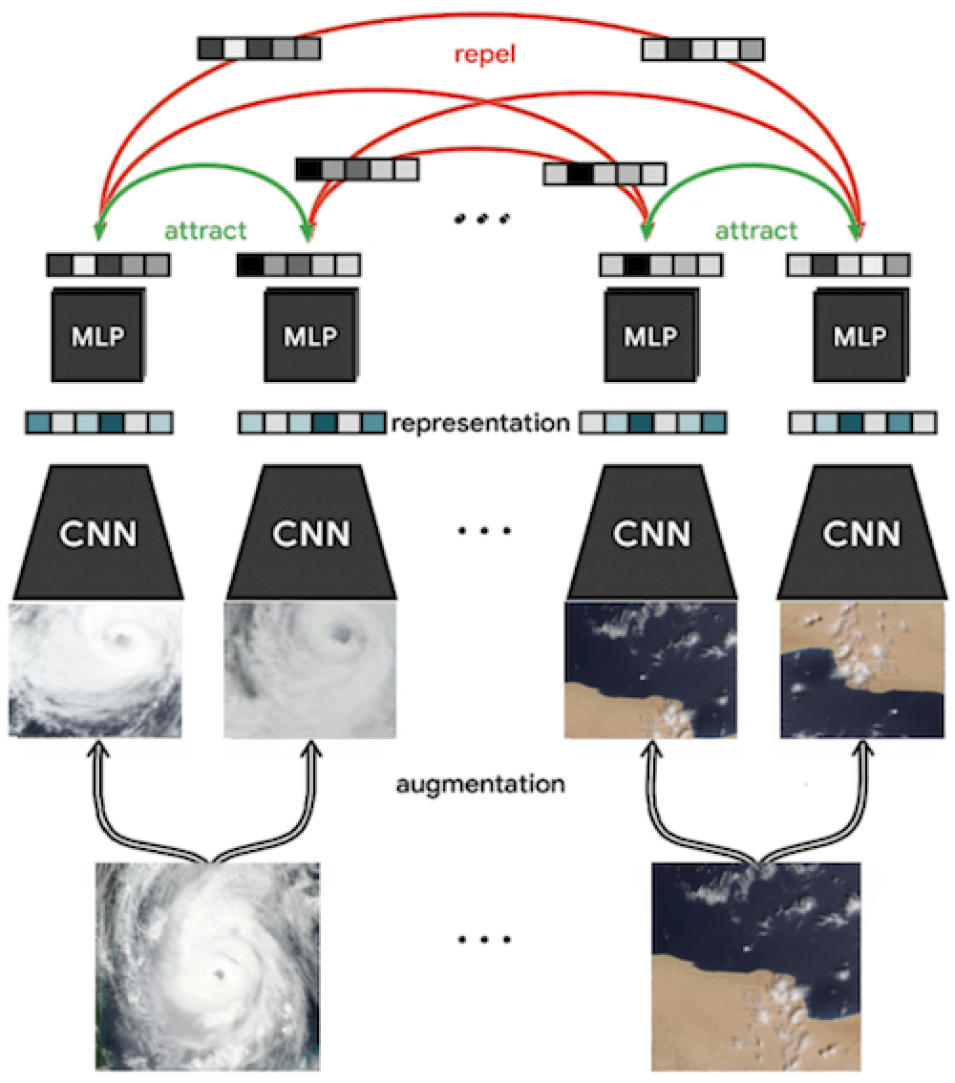

This is the fundamental challenge addressed by a collaboration between IMPACT and the SpaceML initiative, which produced the Worldview image search pipeline. A key component of the pipeline is the Self-Supervised Learner (SSL), which uses self-supervised learning to build the model store. The SSL model operates over an unlabeled pool of data and removes the need for random, manual browsing. Using the vector representations (embeddings) that the SSL generates, researchers can provide a single reference image and search for similar images, enabling rapid curation of datasets of interest from massive unlabeled archives.
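To make the reference-image search concrete, here is a minimal sketch of nearest-neighbor retrieval over embeddings. It assumes the embeddings have already been produced by the SSL encoder and stored in a dictionary keyed by image ID; the tile names, the 3-D vectors, and the choice of cosine similarity are all illustrative assumptions, not the pipeline's actual index or metric (a production system would likely use an approximate nearest-neighbor index over much larger vectors).

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def search_similar(
    reference: Vector, index: Dict[str, Vector], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k image IDs whose embeddings are most similar to the reference."""
    scored = [(image_id, cosine_similarity(reference, emb)) for image_id, emb in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # highest similarity first
    return scored[:k]


# Toy example: hypothetical image tiles with hypothetical 3-D embeddings.
example_index = {
    "tile_a": [0.9, 0.1, 0.0],
    "tile_b": [0.1, 0.9, 0.0],
    "tile_c": [0.8, 0.2, 0.1],
}
top = search_similar([1.0, 0.0, 0.0], example_index, k=2)
print([image_id for image_id, _ in top])  # → ['tile_a', 'tile_c']
```

Because the search only compares vectors, a single labeled example is enough to rank an entire unlabeled archive; the expensive step (running the encoder) happens once per image, ahead of time.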

The impetus behind this collaboration is to streamline Earth science research and make it more efficient. Rudy Venguswamy, the SSL's developer and winner of the IMPACT team's Exceptional Contribution Award, explains:

> Machine learning has the potential to radically transform how we find out about things happening in our universe to, proverbially, more quickly find needles in our various haystacks. When I started building the SSL as a package, I wanted to build something for scientists in diverse fields, not just machine learning experts.

The SSL tool was released as an open-source package built on PyTorch Lightning. The Worldview image search pipeline uses compatible Graphics Processing Unit (GPU)-based transforms from the NVIDIA DALI package for data augmentation and can be trained across multiple GPUs, yielding a 5- to 10-fold speedup in self-supervised training. Because applying new transforms in each epoch is critical to model learning, faster transforms translate directly into faster training.
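Why do per-epoch transforms matter so much? In self-supervised training, the model never sees the same augmented view twice, so augmentation runs on every image in every epoch. The sketch below illustrates that pattern on the CPU in plain Python: each epoch draws fresh random crops and flips, producing two views per image. It is not DALI's API; DALI performs comparable operations on the GPU. The two-views-per-image layout assumes a contrastive objective (for example, SimCLR-style training), and the toy 4x4 "image", the crop size, and the seeding scheme are illustrative assumptions.

```python
import random
from typing import List

Image = List[List[int]]  # toy grayscale image: a list of pixel rows


def random_crop(img: Image, size: int, rng: random.Random) -> Image:
    """Cut a size x size window at a random position."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]


def random_hflip(img: Image, rng: random.Random, p: float = 0.5) -> Image:
    """Mirror the image left-to-right with probability p (returns a copy)."""
    if rng.random() < p:
        return [row[::-1] for row in img]
    return [row[:] for row in img]


def augment(img: Image, rng: random.Random, crop: int = 2) -> Image:
    """One random view: crop, then maybe flip."""
    return random_hflip(random_crop(img, crop, rng), rng)


def epoch_views(dataset: List[Image], epoch: int, base_seed: int = 0) -> List[List[Image]]:
    """Two independently augmented views per image, re-drawn every epoch."""
    rng = random.Random(base_seed + epoch)  # fresh randomness per epoch, reproducible per epoch
    return [[augment(img, rng), augment(img, rng)] for img in dataset]


# Toy usage: a hypothetical 4x4 image whose pixels are 0..15.
toy = [[r * 4 + c for c in range(4)] for r in range(4)]
for epoch in range(2):
    print(epoch, epoch_views([toy], epoch))
```

Since this work repeats for the entire dataset every epoch, moving it from Python loops onto the GPU, as the pipeline does with DALI, removes a bottleneck that would otherwise leave the GPUs idle between batches.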