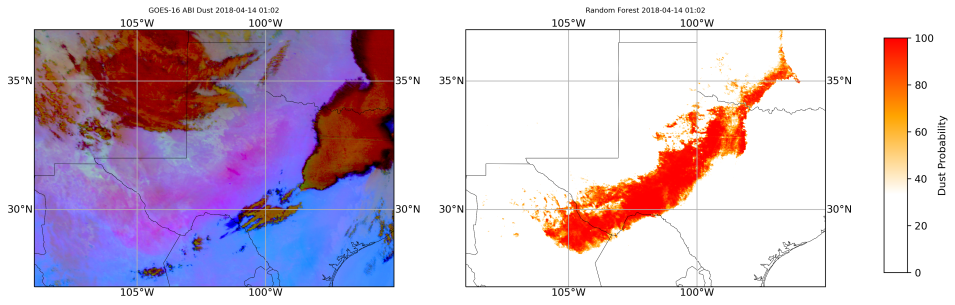

In images where the background was similar in color to dust, the team found that the model correctly labeled about 85% of the dust images and 99% of the non-dust images. The team refined the model to better distinguish dust from smoke, further reducing dust detection errors. The result is a machine learning model capable of accurately detecting and tracking ongoing dust events, especially events that occur at night or during the transition from day to night.
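The 85% and 99% figures are per-class rates, not one overall accuracy: the first is the share of true dust samples the model labeled as dust (recall), and the second is the share of true non-dust samples it labeled as non-dust (specificity). A minimal sketch of that arithmetic, assuming binary labels where 1 means dust and 0 means non-dust; the toy labels in the usage example are invented to reproduce the 85%/99% split and are not the team's data.

```python
from typing import Sequence, Tuple


def per_class_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (dust_rate, non_dust_rate) for binary labels (1 = dust, 0 = non-dust).

    dust_rate     = TP / (TP + FN): fraction of dust samples labeled dust.
    non_dust_rate = TN / (TN + FP): fraction of non-dust samples labeled non-dust.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    # Guard against a class that is absent from the labels.
    dust_rate = tp / (tp + fn) if tp + fn else float("nan")
    non_dust_rate = tn / (tn + fp) if tn + fp else float("nan")
    return dust_rate, non_dust_rate


if __name__ == "__main__":
    # Hypothetical toy labels: 20 dust samples (17 caught), 100 non-dust (99 correct).
    truth = [1] * 20 + [0] * 100
    preds = [1] * 17 + [0] * 3 + [0] * 99 + [1]
    print(per_class_accuracy(truth, preds))  # → (0.85, 0.99)
```

Reporting the two rates separately matters because dust scenes are rare: a model that labeled everything "non-dust" could still score a high overall accuracy while missing every dust event.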

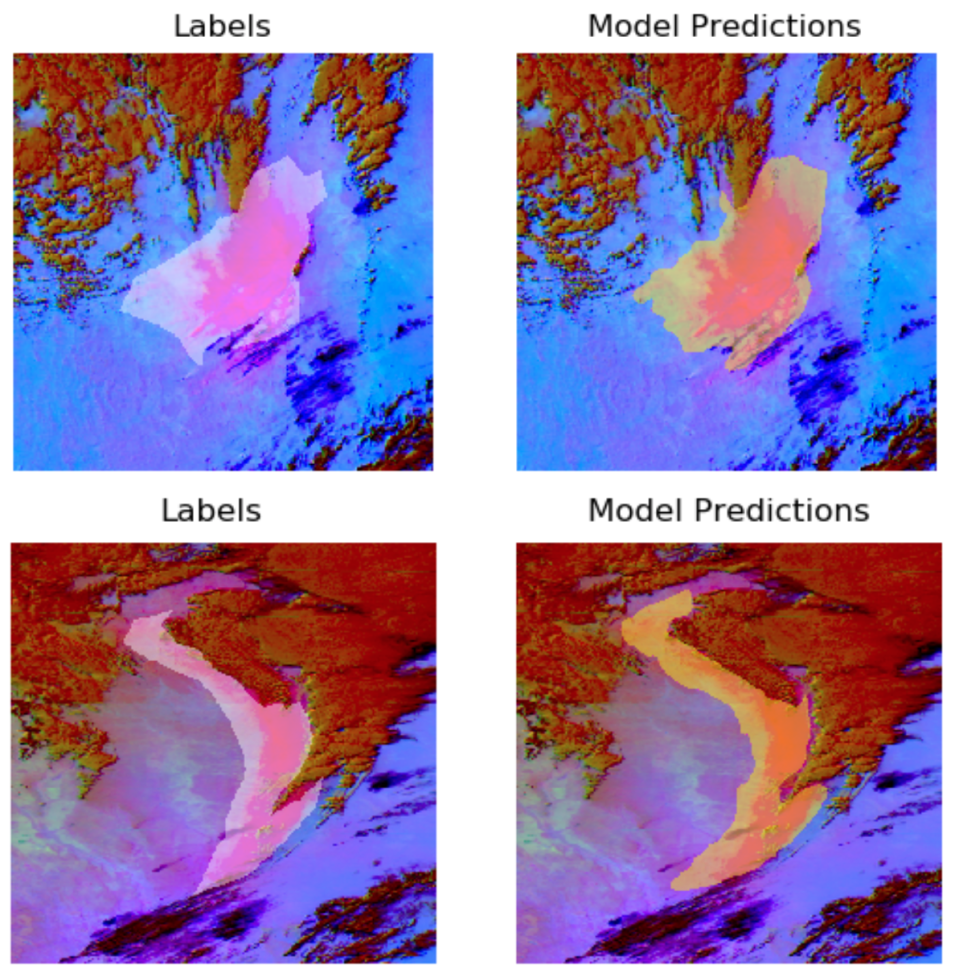

While the simpler machine learning approach accurately distinguished dust plume boundaries at night, IMPACT's deep learning approach was better suited to the complex image classification task the team was tackling. As Muthukumaran points out, however, developing the deep learning component posed several challenges.

For one, the IMPACT team had few dust cases for training because major dust events are infrequent and seasonal. As Muthukumaran notes, a good segmentation model covering a large region needs thousands of training cases; in this instance, the SPoRT team was able to provide a little over 100. Another challenge was building a single model that works on both daytime and nighttime imagery. "Trying to make a single model is a harder problem than having two different models that work on daytime and nighttime separately," Muthukumaran says.

The value of IMPACT's deep learning (DL) approach is that it accounts for spatial variability within the image, producing predictions with reasonable performance metrics. Because the approach is physically based and relies on remote sensing principles, researchers can more easily study the processes driving the onset and progression of high-impact dust events. Model output that better delineates the spatial boundary of a dust storm, especially at night, can provide more lead time for determining when a dust-related hazard might exist and help forecasters designate watch and warning areas for dust storms more accurately.
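To make "delineating the spatial boundary" concrete, here is a minimal sketch that turns a per-pixel dust probability map into a binary mask and then marks the plume's edge pixels. It assumes the model emits one probability per pixel; the 0.5 threshold, the 4-neighbor edge rule, and the function names `dust_mask` and `plume_boundary` are hypothetical illustrations, not the IMPACT or SPoRT pipeline.

```python
from typing import List

Grid = List[List[float]]  # per-pixel dust probability, rows x cols
Mask = List[List[bool]]   # True where a pixel is classified as dust


def dust_mask(prob: Grid, threshold: float = 0.5) -> Mask:
    """Classify each pixel as dust if its probability meets the (assumed) threshold."""
    return [[p >= threshold for p in row] for row in prob]


def plume_boundary(mask: Mask) -> Mask:
    """Mark dust pixels that touch at least one non-dust pixel (4-neighborhood)."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    neighbors = ((-1, 0), (1, 0), (0, -1), (0, 1))

    def is_dust(r: int, c: int) -> bool:
        # Pixels outside the image are treated as non-dust.
        return 0 <= r < rows and 0 <= c < cols and mask[r][c]

    return [
        [
            mask[r][c] and not all(is_dust(r + dr, c + dc) for dr, dc in neighbors)
            for c in range(cols)
        ]
        for r in range(rows)
    ]


if __name__ == "__main__":
    # Hypothetical 5x5 scene: a 3x3 plume of high probability in the center.
    prob = [[0.9 if 1 <= r <= 3 and 1 <= c <= 3 else 0.1 for c in range(5)] for r in range(5)]
    edge = plume_boundary(dust_mask(prob))
    for row in edge:
        print("".join("#" if v else "." for v in row))
```

A segmentation model already makes a decision for every pixel, so the boundary falls out of the mask directly; the threshold is the knob that trades a tighter outline against catching the faint fringe of a plume.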

“A lot of [forecasters] have known areas or zones that they refer to as source regions [for dust storms] and they monitor these areas,” says Kevin Fuell, a SPoRT team member who worked with end users to assess the capability of the SPoRT ML model. “Some of these source regions can produce plumes that are fairly small or narrow. If the machine learning probability output is providing a low probability of a dust event, it gives forecasters a heads up that something is starting, as opposed to in the current era where they have to wait until the plume is visible to know that something is starting up.”
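Fuell's point suggests a simple monitoring rule: watch each known source region and raise an early heads-up when the model's dust probability there climbs above a low level, even before a plume is clearly visible. A minimal sketch under stated assumptions: source regions are hypothetical rectangular pixel boxes, and the 0.2 alert threshold is an invented value chosen to sit well below a typical detection threshold. Neither comes from SPoRT's operational setup.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SourceRegion:
    """Hypothetical rectangular source region in image pixel coordinates (inclusive)."""
    name: str
    row_min: int
    row_max: int
    col_min: int
    col_max: int


def early_alerts(
    prob: List[List[float]],
    regions: List[SourceRegion],
    alert_threshold: float = 0.2,  # assumed low value for an early heads-up
) -> Dict[str, float]:
    """Return {region name: peak probability} for regions at or above the threshold."""
    alerts: Dict[str, float] = {}
    for region in regions:
        peak = max(
            prob[r][c]
            for r in range(region.row_min, region.row_max + 1)
            for c in range(region.col_min, region.col_max + 1)
        )
        if peak >= alert_threshold:
            alerts[region.name] = peak
    return alerts


if __name__ == "__main__":
    # Hypothetical 4x4 probability map with one weak signal in region_a.
    prob = [[0.05] * 4 for _ in range(4)]
    prob[1][0] = 0.3
    regions = [
        SourceRegion("region_a", 0, 1, 0, 1),
        SourceRegion("region_b", 2, 3, 2, 3),
    ]
    print(early_alerts(prob, regions))  # → {'region_a': 0.3}
```

Using the peak rather than the mean keeps small or narrow plumes from being diluted by the clear pixels around them, at the cost of more false alarms from isolated noisy pixels.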

To test the viability of the ML model in real-world conditions, SPoRT plans to partner with the National Weather Service to evaluate the utility of the ML model output with operational meteorologists.

The IMPACT team also intends to continue their deep learning efforts on dust detection and will incorporate this work in their Phenomena Detection Portal, which serves as an event catalog for results achieved using DL models.

“As an event catalog, [the Portal] will keep marking events daily and let users view the product using the [Portal] or API [application programming interface],” says Muthukumaran, who notes that dust is a logical addition to the Portal. “We have created a data model and have expertise where we can operationalize this model and run [it] daily on large-scale satellite imagery.”

The application of machine learning to dust is also helping uncover some of the relationships in the imagery. As Berndt observes, machine learning enables a closer examination of the processes involved in the development, lofting, and movement of dust. Along with these scientific applications, being able to better track dust at night also has practical applications including more rapid warnings and better awareness of situations that could be hazardous to air traffic, ground transportation, and human health.

“From a machine learning standpoint, this is a relatively simple model,” says Nicholas Elmer, the SPoRT team member who worked on the model development. “We’re just giving it satellite imagery and there are many other directions we can go. I think these results provide a lot of optimism for future capabilities in this area.”

Read more about the work:

Berndt, E., et al. 2021. A Machine Learning Approach to Objective Identification of Dust in Satellite Imagery. Earth and Space Science, 28 May 2021. doi:10.1029/2021EA001788

Koehl, D. 2020. Building Better Dust Detection. IMPACT Blog, 28 August 2020.

Ramasubramanian, M., et al. 2021. Day time and Nighttime Dust Event Segmentation using Deep Convolutional Neural Networks. SoutheastCon 2021, pp. 1-5. doi:10.1109/SoutheastCon45413.2021.9401859