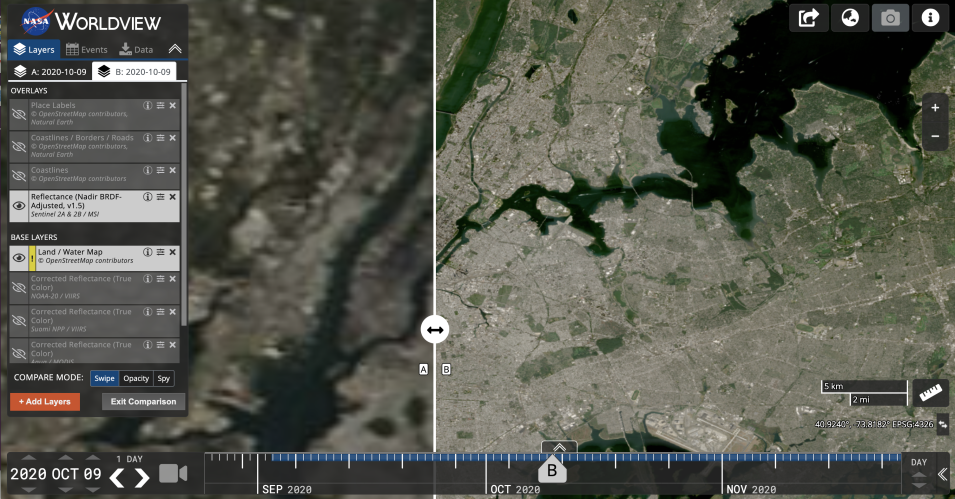

Along with the S30 and L30 HLS data products available through LP DAAC and Earthdata Search, HLS imagery is also available through the EOSDIS Global Imagery Browse Services (GIBS) for interactive exploration using the NASA Worldview data visualization application. A Worldview HLS Tour Story provides information about the imagery and how to work with it in Worldview.

A unique aspect of HLS is that it will be processed, archived, and distributed in the AWS commercial cloud. Data users, though, may not even notice this. “The initial access to HLS will look very traditional,” says Tom Maiersperger at LP DAAC. “Earthdata Search will be the primary search and discovery portal for HLS and accessibility will be through Earthdata Search.”

As Maiersperger notes, LP DAAC’s goal is to integrate HLS into its Application for Extracting and Exploring Analysis Ready Samples (AppEEARS) tool. AppEEARS allows users to work with long time series, transform data in various ways, and reduce data volumes. The tool currently runs on-premises at LP DAAC, and the DAAC is refactoring the AppEEARS code so that it can run in the commercial cloud. Maiersperger says the DAAC aims to finish this work as soon as possible after the HLS historical record back-processing is complete. “We’ve got a ways to go to complete not only the data record, but also for us to be able to provide the best level of service for these data that we can, but we’ll get there,” he says.

As Dr. Freitag at IMPACT observes, hosting HLS in the commercial cloud has significant benefits for data users.

“We’re really trying to take data analysis to the next level where we’re able to provide this large-scale processing without large-scale compute requirements – either downloading a lot of data requiring large amounts of storage or needing to have a lot of memory so you can run through all the files at once,” he says. “For example, if you want to look at all the HLS data for a particular plot of land at the 30-meter resolution provided by HLS, you can do this using your laptop. Everything will be in cloud-optimized GeoTIFF format.”
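To give a rough sense of why a single plot of land is a laptop-sized request, the sketch below compares the data behind a 1 km by 1 km plot with a whole tile. Only the 30-meter resolution comes from the text above. The tile dimensions, the 16-bit pixel size, the 256-pixel internal block size, and the plot location are assumptions chosen for illustration, and the function names are hypothetical. Compression is ignored, so the figures are upper bounds rather than actual transfer sizes.

```python
import math

PIXEL_SIZE_M = 30      # HLS spatial resolution, as stated in the article
TILE_SIDE_PX = 3660    # assumption: pixels per side of one HLS tile
BYTES_PER_PIXEL = 2    # assumption: 16-bit integer pixel values
BLOCK_SIDE_PX = 256    # assumption: internal block size of the cloud-optimized GeoTIFF


def pixel_window(x_off_m, y_off_m, width_m, height_m, pixel_size=PIXEL_SIZE_M):
    """Pixel window (col_start, row_start, col_stop, row_stop) covering a plot.

    Offsets are in meters from the tile's upper-left corner; stop values are exclusive.
    """
    col_start = math.floor(x_off_m / pixel_size)
    row_start = math.floor(y_off_m / pixel_size)
    col_stop = math.ceil((x_off_m + width_m) / pixel_size)
    row_stop = math.ceil((y_off_m + height_m) / pixel_size)
    return col_start, row_start, col_stop, row_stop


def blocks_touched(window, block=BLOCK_SIDE_PX):
    """Number of internal blocks that a pixel window overlaps."""
    col_start, row_start, col_stop, row_stop = window
    cols = (col_stop - 1) // block - col_start // block + 1
    rows = (row_stop - 1) // block - row_start // block + 1
    return cols * rows


def uncompressed_bytes(n_pixels, bytes_per_pixel=BYTES_PER_PIXEL):
    """Uncompressed size of n_pixels for one band."""
    return n_pixels * bytes_per_pixel


# Hypothetical 1 km x 1 km plot, 50 km east and 50 km south of the tile corner.
window = pixel_window(50_000, 50_000, 1_000, 1_000)
n_blocks = blocks_touched(window)
subset_bytes = uncompressed_bytes(n_blocks * BLOCK_SIDE_PX ** 2)
full_bytes = uncompressed_bytes(TILE_SIDE_PX ** 2)

print(f"window: {window}, blocks read: {n_blocks}")
print(f"subset: {subset_bytes / 1e6:.2f} MB vs full band: {full_bytes / 1e6:.2f} MB")
```

Under these assumptions, a reader that fetches only the blocks overlapping the plot handles a small fraction of each band, which is the property that makes per-plot time-series work practical without bulk downloads.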

Of course, the purpose of NASA data is to enable research, and HLS is expected to contribute significantly to explorations into terrestrial processes. “HLS is really a big deal,” says Tom Maiersperger. “For this dataset to have matured and for it to be global, which is unprecedented in earlier versions, just increases the significance of this product.”

A principal HLS application area will be agriculture, including studies into vegetation health; crop development, management, and identification; and drought impacts. HLS data have already been used in the development of a new vegetation seasonal cycle dataset available through LP DAAC: the Multi-Source Land Imaging (MuSLI) Land Surface Phenology (LSP) Yearly North America product (doi:10.5067/Community/MuSLI/MSLSP30NA.001).

Another important benefit of HLS is that Landsat 8 and both Sentinel-2 satellites have equator crossing times of 10 am and 10:30 am local time, respectively. Currently, NASA’s Terra satellite is the only MODIS or VIIRS platform with a morning crossing time (10:30 am local time). With Terra currently drifting in crossing time, HLS will become a primary source for global morning observations at a consistent equator crossing time.

The provisional release of HLS data products represents only the latest achievement of an ongoing seven-year effort. Dr. Masek is quick to point out the challenges the team had to overcome to get to this point, including improving Sentinel-2 data processing and geolocation algorithms, developing better ways to correct for differences in view angles and surface reflectance between Landsat and Sentinel-2, and organizing the processing of such a high-volume dataset.

Dr. Masek also goes out of his way to highlight the particular contributions of Dr. Junchang Ju of NASA’s Biospheric Sciences Laboratory and the University of Maryland to the HLS success. “He’s really been the technical lead for this in terms of programming, validating the algorithm performance, catching mistakes, and working with [IMPACT] on implementing the code,” he says. “I want to make sure he gets a lot of credit for the work that’s gone on.”