The science team uses statistical modeling and satellite data to make maps of bird diversity based on the recordings collected by the citizen scientists. These maps are combined with habitat models based on two of NASA’s cutting-edge sensors: the Global Ecosystem Dynamics Investigation (GEDI), a space-based LIDAR on the International Space Station, and the Hyperspectral Infrared Imager (HyspIRI), a future satellite imaging spectrometer whose data are currently simulated. Data from these sensors are used to understand species distribution and the factors related to conserving bird diversity.

The Soundscapes to Landscapes project is a partnership among the Center for Interdisciplinary Geospatial Analysis (CIGA) at Sonoma State University, Point Blue Conservation Science, Audubon California, Pepperwood Preserve, Sonoma County Agricultural Preservation and Open Space District, Northern Arizona University, the University of California, Merced, and the University of Edinburgh.

Update October 2019

The spring 2019 field campaign focused on surveying publicly owned properties with new research permits for Sonoma Regional Parks, US Fish and Wildlife Service, and California State Parks. The project also obtained access to several larger private properties in the west area of Sonoma County and a large block of forest in the remote northwest area of the county.

Some volunteers participated through a “mail deploy” approach, in which AudioMoth recorders and instructions were mailed to landowners, who deployed the units on their own properties. Thirty-two citizen scientists participated in this activity. Collectively, volunteers spent 1,163 hours in the field.

Citizen scientists also helped identify, tag, and validate bird calls in recordings, either in organized “bird blitzes” or at home. These validated calls will be used to sort through soundscape recordings and accurately identify target bird species at survey sites. The University of California, Merced (UCM) team also developed a prototype deep learning framework to identify bird species in noisy audio recordings. Because the field audio data were still being acquired and bird calls were still being validated for training, team member Shrishail Baligar at UCM downloaded a large dataset of bird call audio files from the xeno-canto website to train and evaluate the prototype deep learning methods. The initial results were promising.

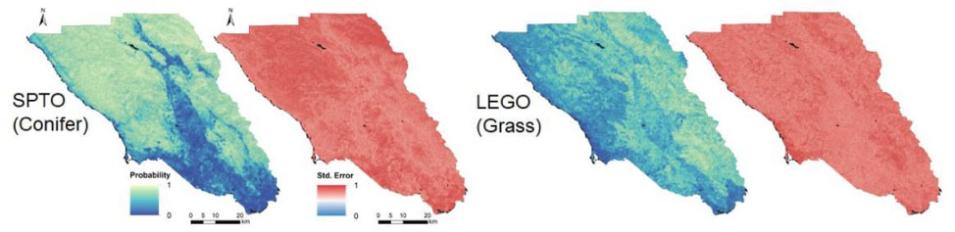

The in situ bird diversity data from field sites, as measured by bioacoustics, will be used with remote sensing, climate, and other predictor variables in species distribution models (SDMs) that estimate the probability of occupancy for a given bird species. During year one, the Co-Is greatly improved the SDM code from the prototype phase so that it runs multiple machine learning models on a high-performance computing (HPC) cluster at collaborator Northern Arizona University. In an SDM analysis with simulated GEDI data and existing bird diversity data (from eBird and the Breeding Bird Survey), the team found that canopy structure as measured by GEDI was the second most important group of predictor variables, after climate predictors. Canopy structure was particularly important in predicting the occurrence of conifer forest birds.
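
For readers curious how an SDM can rank groups of predictors, the following is a minimal sketch and not the project's code. It assumes a plain logistic regression as a stand-in for the team's machine learning models, and it assumes grouped permutation importance as the ranking method; the update does not say how importance was measured. The data are synthetic, and the group names, column layout, and effect sizes are hypothetical.

```python
import math
import random


def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X, y, lr=0.1, epochs=300):
    """Fit a logistic regression by batch gradient descent; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                gw[j] += err * xi[j]
            gb += err
        w = [wj - lr * g / n for wj, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def predict(model, x):
    """Estimated probability of occupancy at a site with predictors x."""
    w, b = model
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def log_loss(model, X, y):
    eps = 1e-12
    total = 0.0
    for xi, yi in zip(X, y):
        p = min(max(predict(model, xi), eps), 1.0 - eps)
        total -= yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return total / len(X)


def group_importance(model, X, y, groups, rng):
    """Increase in log loss when all columns of a group are shuffled together."""
    base = log_loss(model, X, y)
    scores = {}
    for name, cols in groups.items():
        perm = list(range(len(X)))
        rng.shuffle(perm)
        X_perm = [list(row) for row in X]
        for i, src in enumerate(perm):
            for c in cols:
                X_perm[i][c] = X[src][c]
        scores[name] = log_loss(model, X_perm, y) - base
    return scores


# Synthetic sites: hypothetical predictor groups and effect sizes, not project data.
rng = random.Random(0)
GROUPS = {"climate": [0, 1], "canopy_structure": [2, 3], "noise": [4]}
X, y = [], []
for _ in range(400):
    row = [rng.gauss(0, 1) for _ in range(5)]
    logit = 1.5 * row[0] + 1.0 * row[1] + 0.8 * row[2] + 0.6 * row[3]
    X.append(row)
    y.append(1 if rng.random() < sigmoid(logit) else 0)

model = fit_logistic(X, y)
importance = group_importance(model, X, y, GROUPS, rng)
ranking = sorted(importance, key=importance.get, reverse=True)
print(ranking)
```

Shuffling a whole group at once, rather than one column at a time, scores the group's joint contribution, which matches how the update reports importance by predictor group. Any ranking the sketch prints reflects only the effect sizes built into the synthetic data.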