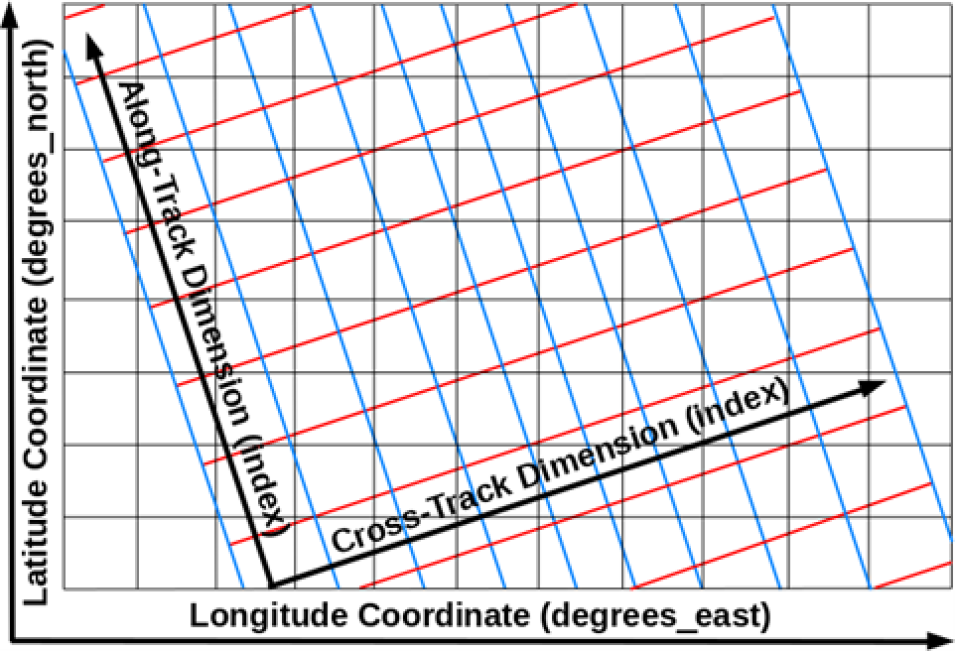

Data structures are containers for geolocation and science data. Guidance regarding swath structures in netCDF formats is provided in Encoding of Swath Data in the CF Convention [30]. The ESDSWG Dataset Interoperability Working Group (DIWG) has provided guidance regarding grid structures in netCDF-4 in [24] (Rec. 2.8-2.12) and [31] (Rec. 3.6). NOAA has provided a set of netCDF format templates for various types of data products [32] although these should be considered as informative, not normative. Data producers can obtain guidance and samples from their DAAC. Earthdata Search [33] can also be used to acquire a variety of data in different formats and structures.

3.1.2 GeoTIFF

The Georeferenced Tagged Image File Format (GeoTIFF, *.tif) is a georeferenced raster image format that uses the public domain Tagged Image File Format (TIFF) [34], and is used extensively in the Geographic Information System (GIS) [35] and Open Geospatial Consortium (OGC) communities [36]. Although the types of metadata that can be added to GeoTIFF files are much more limited than with netCDF-4 and HDF5, the OGC GeoTIFF Standards Working Group is planning to work on reference system metadata in the near term. Both data producers and users find this file format easy to visualize and analyze, and so it has many uses in Earth Science. OGC GeoTIFF Standard, Version 1.1 is an EOSDIS recommended format [37].

Recently, a cloud-optimized profile for GeoTIFF (called COG) has been developed to make retrieval of GeoTIFF data from Web Object Storage (object storage accessible through https) more efficient [38] [39]. Also, OGC has published a standard for COG [40] [41]. See [37] for a discussion of SWAL of GeoTIFF. DIWG has recommended that data producers include only one variable per GeoTIFF file [23].

3.2 Recognized Formats

In some cases, where the dominant user communities for a given data product have historically used other formats, it may be more appropriate to continue to use those formats instead of the formats recommended above. If such formats are not already on ESCO’s list of approved data formats, they can be submitted to ESCO for review and approval following the Request for Comments instructions [42].

3.2.1 Text Formats

NASA DAACs archive numerous datasets that are in “plain text”, typically encoded using the American Standard Code for Information Interchange (ASCII). Unicode, which is a superset of ASCII, is used to represent a much wider range of characters, including those used for languages other than English. The list of ESCO’s approved standards using ASCII includes: International Consortium for Atmospheric Research on Transport and Transformation (ICARTT), NASA Aerogeophysics ASCII File Format Convention, SeaBASS Data File Format, and YAML Encoding ASCII Format for GRACE/GRACE-FO Mission Data. Recommendations on the use of ASCII formats are presented in the ASCII File Format Guidelines for Earth Science Data [43].

It should be noted that the comma-separated value (CSV) format is also a plain text format, as are Unidata’s Common Data Language (CDL), JavaScript Object Notation (JSON), and markup languages such as HTML, XML, and KML. The main advantage of encoding data in ASCII is that the contents are human readable, searchable, and editable. The main disadvantage is that file size, if not compressed, will be much larger than if the equivalent data were stored in a well-structured binary format such as netCDF-4, HDF5, or GeoTIFF. Another disadvantage of ASCII is that print-read consistency can be lost. Different programs reading a file could convert numerical values expressed in ASCII to slightly different floating-point numbers. This could complicate certain aspects of software engineering such as unit tests.
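The loss of print-read consistency can be illustrated with a minimal sketch. The sample values and the six-decimal output format are assumptions chosen for illustration; actual behavior depends on how a given program writes and parses numbers.

```python
import struct

# Case 1: two readers parse the same text at different precisions.
text = "0.1"
as_double = float(text)  # parsed as a 64-bit float
# Emulate a reader that stores the value as a 32-bit float.
as_single = struct.unpack("f", struct.pack("f", float(text)))[0]
print(as_double == as_single)  # the two readers disagree

# Case 2: a writer that prints too few digits cannot be read back exactly.
value = 0.1 + 0.2
short_text = f"{value:.6f}"  # assumed fixed-width output format
full_text = repr(value)      # shortest text that round-trips in Python 3
print(float(short_text) == value)
print(float(full_text) == value)
```

Unit tests that compare values read from text files may therefore need a tolerance rather than exact equality.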

3.2.2 ICARTT

The ICARTT format [44] arose from a consensus established across the atmospheric chemistry community for visualization, exchange, and storage of aircraft instrument observations. The format is text-based and composed of a metadata section (e.g., data source, uncertainties, contact information, and brief overview of measurement technique) and a data section. Although it was primarily designed for airborne data, the format is also used for non-airborne field campaigns.

The simplicity of the ICARTT format allows files to be created and read with a single subprogram for multiple types of collection instruments and can assure interoperability between diverse user communities. Since typical ICARTT files are relatively small, the inefficiency of ASCII for storage is not a serious concern. See [44] for a discussion of SWAL of the ICARTT format.

3.2.3 Vector Data and Shapefiles

The OGC GeoPackage is a platform-independent and standards-based data format for geographic information systems implemented as an SQLite database container (*.gpkg) [45]. It can store vector features, tile matrix sets of imagery and raster maps at various scales, and extensions in a single file.

OGC has standardized the Keyhole Markup Language (KML, *.kml) format that was created by Keyhole, Inc. and is based on the eXtensible Markup Language (XML) [46]. The format delivers browse-level data (e.g., images) and small amounts of vector data (e.g., sensor paths, region boundaries, point locations), but it is voluminous for storing large data arrays. KML supports only geographic projection (i.e., evenly spaced longitude and latitude values), which can limit its usability. The format combines cartography with data geometry in a single file, which allows users flexibility to encode data and metadata in several different ways. However, this is a disadvantage to tool development and limits the ability of KML to serve as a long-term data format for archive. OpenGIS KML is an approved standard for use in EOSDIS. As noted in the recommendation, KML is primarily suited as a publishing format for the delivery of end-user visualization experiences. There are significant limitations to KML as a format for the delivery of data as an interchange format [47].

A Shapefile is a vector format for storing geometric location and attribute information of geographic features, and requires a minimum of three files to operate: the main file that stores the feature geometry (*.shp), the index file that stores the index of the feature geometry (*.shx), and the dBASE table that stores the attribute information of features (*.dbf) [48] [49]. Geographic features can be represented by points, lines, or polygons (areas). Geometries also support third and fourth dimensions as Z and M coordinates, for elevation and measure, respectively. Each of the component files is limited to 2 gigabytes. Shapefiles have several limitations that impact storage of scientific data. “For example, they cannot store null values, they round up numbers, they have poor support for Unicode character strings, they do not allow field names longer than 10 characters, and they cannot store both a date and time in a field” [50]. Additional limitations are listed in the cited article.

GeoJSON [51] is a format for encoding a variety of geographic features like Point, LineString, and Polygon. It is based on JSON and uses several types of JSON objects to represent these features, their properties, and their spatial extents.
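As a minimal sketch of this structure, the following builds a GeoJSON FeatureCollection with one LineString feature using only the Python standard library. The coordinates and the property name are hypothetical and serve only to show the layout of the objects.

```python
import json

# Hypothetical coordinates; GeoJSON positions are ordered [longitude, latitude].
track = [[-76.90, 38.95], [-76.85, 38.99], [-76.80, 39.02]]

lons = [lon for lon, lat in track]
lats = [lat for lon, lat in track]

feature = {
    "type": "Feature",
    # Spatial extent as a bounding box: [west, south, east, north].
    "bbox": [min(lons), min(lats), max(lons), max(lats)],
    "geometry": {"type": "LineString", "coordinates": track},
    "properties": {"platform": "example aircraft"},  # illustrative attribute
}
collection = {"type": "FeatureCollection", "features": [feature]}

# Serialize to text and read it back to confirm the structure survives.
text = json.dumps(collection, indent=2)
decoded = json.loads(text)
print(text)
```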

3.2.4 HDF5

HDF5 is a widely used data format designed to store and organize large amounts of data. NetCDF-4 (Section 3.1.1) and HDF-EOS5 (Section 3.2.5) are both built on HDF5. NetCDF-4 is the recommended format for new Earth Science data products as this format is generally more easily utilized by existing tools and services. However, as detailed in Section 3.3.3, there are emerging strategies for enhancing HDF5 for improved S3 read access that represent important usage and performance considerations for Earth Science data distributed via the NASA Earthdata Cloud.

3.2.5 HDF-EOS5

HDF-EOS5 was a specially developed data format for the Earth Observing System based on HDF5, which has been widely used for NASA Earth Science data products and includes data structures specifically designed for Earth Science data.

HDF-EOS5 employs the HDF-EOS data model [52] [53], which remains valuable for developing Earth Science data products. The Science Data Production (SDP) Toolkit [52] and HDF-EOS5 library provide the API for creating HDF-EOS5 files that are compliant with the EOS data model.

In choosing between HDF-EOS5 and netCDF-4 with CF conventions, netCDF-4/CF is recommended over HDF-EOS5 due to the much larger set of tools supporting the format.

3.2.6 Legacy Formats

Legacy formats (e.g., netCDF-3, HDF4, HDF-EOS2, and ASCII) are those used in early EOS missions, though some missions continue to produce data products in these formats. Development of new data products or new versions of old products from early missions may continue to use the legacy format, but product developers are strongly encouraged to transition data to the netCDF-4 format for improved interoperability with data from recent missions. Legacy formats are recommended for use only in cases where the user community provides strong evidence that research will be hampered if the data formats are changed.

3.2.7 Other Formats

Some data products are provided by data producers in formats that are not endorsed by ESCO. These can include ASCII files with no header, simple binary files that are not self-describing, comma-separated value (CSV) files, proprietary instrument files, etc. Producers of such data, such as some participants in field campaigns, are not necessarily NASA-funded; thus, they are not under any obligation to conform to NASA’s format requirements or could lack adequate resources to do so.

There are other formats that are currently evolving in the community, stemming from developments in cloud computing, Big Data, and Analysis-Ready Data (ARD) [54] that are discussed in Section 3.3.

3.3 Cloud-Optimized Formats and Services

Following the ESDS Program’s strategic vision to develop and operate multiple components of NASA's EOSDIS in a commercial cloud environment, the ESDIS Project implemented the Earthdata Cloud architecture that went operational in July 2019 using Amazon Web Services (AWS) [55]. Key EOSDIS services, such as CMR and Earthdata Search, were deployed within it. Additionally, the DAACs are moving the data archives they manage into the cloud.

The AWS Simple Storage Service (S3) offers scalable solutions to data storage and on-demand/scalable cloud computing, but also presents new challenges for designing data access, data containers, and tracking data provenance. AWS S3 is a popular example of object-based cloud storage, but the general characteristics noted in this document are applicable for object-based cloud storage from other providers as well. Cloud (object) storage is typically accessed through HTTP “range-get” requests in contrast to traditional disk reads, and so packaging the data into optimal independent “chunks” (see Section 5) is important for optimizing access and computation.
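A range-get request differs from an ordinary download only in its Range header, which names the first and last byte wanted. The sketch below builds such a request without sending it. The URL, the helper name `range_header`, and the 8 MiB offsets are hypothetical.

```python
import urllib.request


def range_header(offset: int, length: int) -> dict:
    """Return the HTTP Range header for `length` bytes starting at `offset`."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    last = offset + length - 1  # HTTP byte ranges are inclusive at both ends
    return {"Range": f"bytes={offset}-{last}"}


# Hypothetical object URL and chunk location (second 8 MiB block of the file).
url = "https://example.com/data/granule.nc"
chunk_offset = 8 * 1024 * 1024
chunk_length = 8 * 1024 * 1024

# The request is only constructed here; nothing is sent over the network.
request = urllib.request.Request(url, headers=range_header(chunk_offset, chunk_length))
print(request.get_header("Range"))
```

A server that honors the header returns only the requested bytes, which is why the layout of chunks within the object determines how many requests a reader needs.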

Furthermore, the object store architecture allows data to be distributed across multiple physical devices, in contrast to local contiguous storage for traditional data archives, with the data content organization often described in byte location metadata (either internally or in external “sidecar” files). Thus, many cloud storage “formats” are better characterized as (data) content organization schemes (see Appendix B), defined as any means for enhancing the addressing and access of elements contained in a digital object in the cloud.

Cloud “optimized” data containers or content organization schemes that are being developed to meet the emerging cloud compute needs and requirements include Cloud-Optimized GeoTIFF (COG), Zarr (for-the-cloud versions of HDF5 and netCDF-4 [including NCZarr]), and cloud-optimized point-cloud data formats (see also [56] for additional background). COG, Zarr, HDF5, and netCDF-4 (see Sections 3.3.1, 3.3.2, and 3.3.3, respectively) remain preferred formats for raster data, while point cloud formats are more appropriate for lidar and point-based irregular data (see Section 3.3.4). These cloud storage optimizations, although described in well-defined specifications, are still advancing and growing in maturity with regard to their use and adaptations in cloud-based workflows, third party software support, and web services (e.g., OPeNDAP, THREDDS, OGC WCPS). However, none of these formats require in-cloud processing for scientific analysis and can work with local computer operating systems and libraries without issue once the data have been downloaded. Analysis-Ready, Cloud-Optimized (ARCO) data, where the cloud data have been prepared with complete self-describing metadata following a standard or best practice, including the necessary quality and provenance information, and well-defined spatial and temporal coordinate systems and variables, offer a significant advantage for reproducible science, computation optimization, and cost reduction.

Data producers should carefully optimize their data products for partial data reads (via HTTP or direct S3 access) to make them as cloud friendly as possible. This requires organizing the data into appropriate producer-defined chunk sizes to facilitate access. The best guidance thus far is that S3 reads are optimized in the 8-16 megabyte (MB) range [57] presenting a reasonable range of chunk sizes. The Pangeo Project [58] reported chunk sizes ranging from 10-200 MB when reading Zarr data stored in the cloud using Dask [59] and the desired chunking often depends on the likely access pattern (e.g., chunking in small Regions of Interest (ROIs) for long time series data requests vs. chunking in larger ROI slices for large spatial requests over a smaller temporal range). However, on the other end of the spectrum, chunks that are too small, on the order of a few megabytes, typically impede read performance in the cloud. Data producers are advised to consult with their assigned DAAC regarding the specific approaches to their products including the chunking implementation.
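The size of a candidate chunk follows directly from its shape and data type, which makes it straightforward to check a layout against the ranges quoted above. The following sketch uses hypothetical chunk shapes for a (time, latitude, longitude) variable stored as 4-byte floats, and assumes 1 MB = 10^6 bytes and uncompressed data.

```python
from math import prod


def chunk_megabytes(chunk_shape, bytes_per_value):
    """Uncompressed size of one chunk in megabytes (1 MB = 10**6 bytes)."""
    return prod(chunk_shape) * bytes_per_value / 1e6


# Hypothetical layouts for a (time, lat, lon) float32 variable.
time_series_chunk = (365, 100, 100)  # small region, long time span
map_chunk = (1, 1800, 1800)          # large region, single time step

for name, shape in [("time series", time_series_chunk), ("map", map_chunk)]:
    print(f"{name}: {chunk_megabytes(shape, 4):.2f} MB")
```

Both layouts hold a similar number of bytes per chunk but favor opposite access patterns, which is why the likely access pattern should drive the choice.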

3.3.1 Cloud-Optimized GeoTIFF

The COG data format builds on the established GeoTIFF format by adding features needed to optimize data use in a cloud-based environment [39] [40]. The primary addition is that internal tiling (i.e., chunking) for each layer is enabled. The tiling features enable data reads to access only the information of interest without reading the whole file. Since COG is compatible with the legacy GeoTIFF format it can be accessed using existing software (e.g., GIS software).
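The benefit of internal tiling can be seen by counting the tiles that a requested window touches. The sketch below is illustrative only: the 512-pixel square tile size, the window, and the function name `tiles_for_window` are assumptions, not properties of any particular product.

```python
def tiles_for_window(row_off, col_off, height, width, tile_size=512):
    """Return (tile_row, tile_col) indices of tiles overlapping a pixel window."""
    first_row = row_off // tile_size
    last_row = (row_off + height - 1) // tile_size
    first_col = col_off // tile_size
    last_col = (col_off + width - 1) // tile_size
    return [
        (r, c)
        for r in range(first_row, last_row + 1)
        for c in range(first_col, last_col + 1)
    ]


# Hypothetical 10240 x 10240 pixel image, i.e. 20 x 20 tiles of 512 pixels.
total_tiles = (10240 // 512) ** 2

# A 600-row by 300-column window starting at row 1000, column 2000.
needed = tiles_for_window(row_off=1000, col_off=2000, height=600, width=300)
print(f"{len(needed)} of {total_tiles} tiles must be read")
```

A reader that knows the byte location of each tile can then fetch only those tiles instead of the whole file.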

3.3.2 Zarr

Zarr is an emerging open-source format that stresses efficient storage of multidimensional array data in the cloud and fast parallel input/output (I/O) computations [60] [61]. Its data model supports compressed and chunked N-dimensional data arrays, inspired in part by the HDF5 and netCDF-4 data models. Its consolidated metadata can include a subset of the CF metadata conventions that are familiar to existing users of netCDF-4 and HDF5 files and allow for many useful time-series and transformation operations through third party libraries such as xarray [62]. Zarr stores chunks of data as separate objects in cloud storage with an external consolidated JSON metadata file containing all the locations to these data chunks. A Zarr software reader (e.g., using xarray in Python) only needs a single read of the contents of the consolidated metadata file (i.e., the sidecar file) to determine exactly where in the Zarr data store to locate data of interest, substantially reducing file I/O overhead and improving efficiency for parallel CPU access.
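Because each chunk is a separate object, a reader can work out which object holds a given array element from the chunk shape alone. The following sketch assumes the Zarr version 2 convention of naming chunk objects by their dot-separated chunk indices. The array name, chunk shape, and element index are hypothetical.

```python
def chunk_key(array_name, index, chunk_shape, separator="."):
    """Return the object key of the chunk containing element `index`.

    Assumes Zarr v2 style keys: chunk indices joined by `separator`
    under the array's name.
    """
    chunk_index = [i // c for i, c in zip(index, chunk_shape)]
    return f"{array_name}/" + separator.join(str(i) for i in chunk_index)


# Hypothetical (time, lat, lon) array chunked as 100 x 500 x 500.
key = chunk_key("sst", index=(400, 250, 3000), chunk_shape=(100, 500, 500))
print(key)
```

Only the object with this key needs to be fetched to read the element, and neighboring chunks can be requested in parallel.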

3.3.3 NetCDF-4 and HDF5 in the Cloud

Much of NASA Earth Science data has been historically stored in netCDF-4 and HDF5 data files. Besides maintaining continuity with legacy data products, there are other important data life cycle reasons to continue to use these formats, including data packaging, data integrity, and self-describing characteristics. The challenge is how to best optimize the individual files for cloud storage and access. Here, data chunking plays a leading role in this optimization with the general guidelines on this subject found in the introduction to Section 3.3. It has been demonstrated that it is possible to translate the annotated Dataset Metadata Response (DMR++) [63] sidecar files that are generated for many of NASA’s HDF5 files that have been migrated to the Earthdata Cloud into a JSON file with the key/value pairs that the Zarr library needs [64], making the HDF5 files directly readable as Zarr stores.

Further cloud optimization of HDF5 files, specifically, requires enhancing the internal HDF metadata structure via the “Paged Aggregation” feature at the time of file creation (or modification via h5repack), so that the internal file metadata (i.e., not the global metadata) and data are organized into a single or a few pages of specified size (usually on the order of mebibytes) to improve file read I/O. The exact size is important for parallel I/O operations in the cloud and for HDF libraries that can cache the pages, further improving performance.

NCZarr is an extension and mapping of the netCDF-enhanced data model to a variant of the Zarr storage model (see Section 3.3.2).

Additional discussion of cloud optimization of netCDF-4 and HDF5 files via data transformation services is provided in Section 3.3.5.

3.3.4 Point Cloud Formats

A point cloud is commonly defined as a 3D representation of the external surfaces of objects within some field of view, with each point having a set of X, Y and Z coordinates. Point cloud data have traditionally been associated with lidar scanners such as on aircraft; in addition, in situ sensors such as those mounted on ocean gliders and airborne platforms can also be considered as point cloud data sources. The key characteristic is that these instruments produce a large number of observations that are irregularly distributed and thus are “clouds” of points.

There are many emerging formats in this evolving genre [65]. Some noteworthy formats include Cloud-Optimized Point Cloud (COPC), Entwine Point Tiles (EPT), and Parquet. COPC builds on existing point cloud formats popular in the lidar community known as LAS/LAZ (LASer file format/LAS compressed file format) and specifications from EPT. EPT itself is an open-source content organization scheme and library for point cloud data that is completely lossless, uses an octree-based storage format, and contains metadata in JSON. Parquet is a column-based data storage format that is suitable for tabular style data (including point cloud and in situ data). Its design lends itself to efficient queries and data access in the cloud. GeoParquet is an extension that adds interoperable geospatial types such as Point, Line, and Polygon to Parquet [66].

3.3.5 Additional Data Transformation and Access Services

For data analysis in the cloud, it is often preferred to optimize data for parallel I/O and multi-dimensional analysis. This is where Zarr excels, and a number of transformation services from netCDF-4 and HDF5 files to Zarr have emerged to support this need. Traditional file-level data access and subsetting via the OPeNDAP web service has also evolved to meet the needs of cloud storage.

Many of these tools enable Zarr-like parallel and chunked access capabilities to be applied onto traditional netCDF-4 and HDF5 files in AWS S3. While these services are not critical for producing data products, it is important for data producers to be aware of their use by Earth Science data consumers.

3.3.5.1 Harmony Services

The name “Harmony” refers to a set of evolving open-source, enterprise-level transformation services for data residing in the NASA Earthdata Cloud [67]. These services are accessed via a well-defined and open API, and include services for data conversion and subsetting.

Harmony-netcdf-to-zarr [68] is a service to transform netCDF-4 files to Zarr cloud storage on the fly. It aggregates individual input files into a single Zarr output that can be read using xarray calls in Python. As additional files become available, this service must be rerun to account for the new data.

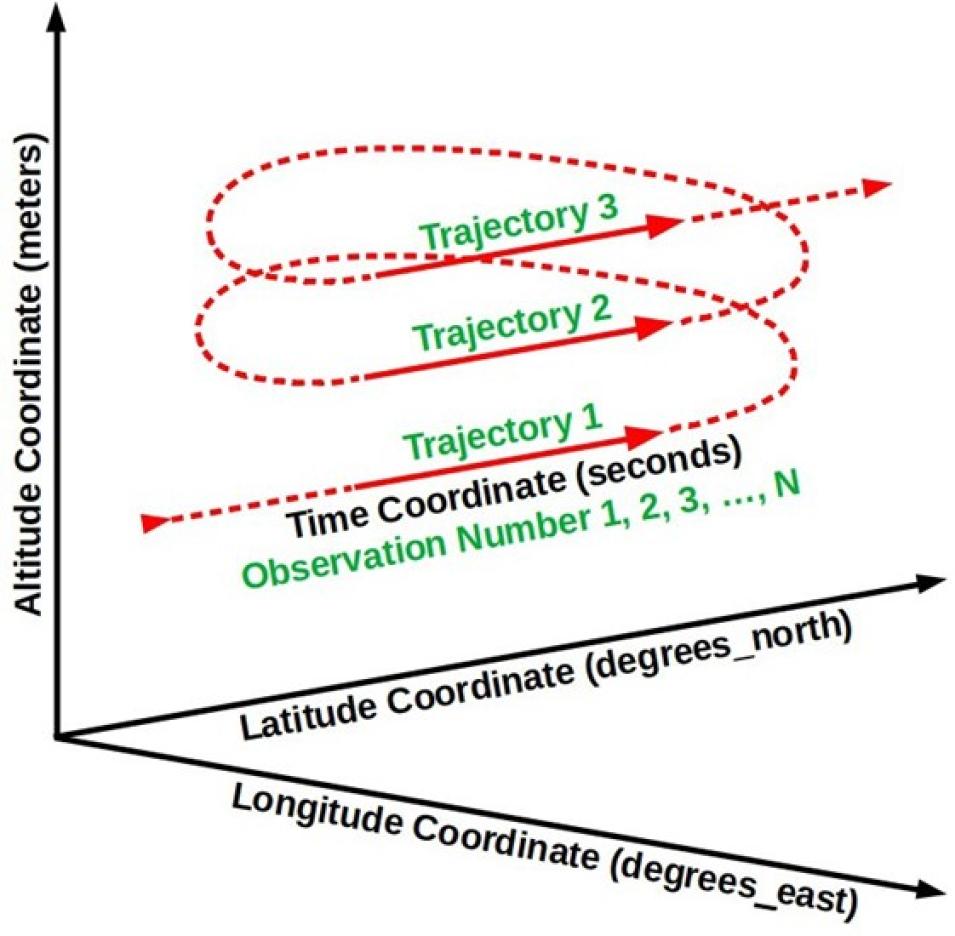

Subsetting requests for trajectory (1D) and along track/across track data in netCDF and HDF files are executed using the Harmony L2-subsetter service, while geographically gridded Level 3 or 4 data use the Harmony OPeNDAP SubSetter service (HOSS). The Harmony-Geospatial Data Abstraction Library (GDAL)-adapter service supports reprojection.

3.3.5.2 Kerchunk

Kerchunk is a Python library that generates a “virtual” Zarr store from individual netCDF-4 and HDF5 files by creating an external metadata JSON sidecar file that contains the locations of all the individual input data chunks [69]. The “virtual” Zarr store can be read using xarray, and the original netCDF-4 and HDF5 files remain unmodified in content and location. As Kerchunk leverages the fsspec library for storage backend access, it enables end users to access chunks in parallel more efficiently from cloud-based S3 storage, as well as through other remote access methods such as Secure Shell (SSH) or Server Message Block (SMB).
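The role of the sidecar file can be sketched with a small reference table that maps Zarr-style chunk keys to byte ranges inside the original files. The layout below is modeled on the reference files that Kerchunk writes, but it is a simplified illustration: the bucket, file names, offsets, lengths, and the helper `locate` are all invented.

```python
import json

# Simplified, hypothetical reference table: key -> [file URL, offset, length].
references = {
    "version": 1,
    "refs": {
        "sst/0.0": ["s3://example-bucket/granule_001.nc", 20480, 8388608],
        "sst/1.0": ["s3://example-bucket/granule_002.nc", 20480, 8388608],
    },
}


def locate(refs, key):
    """Return (url, first_byte, last_byte) for the chunk stored under `key`."""
    url, offset, length = refs["refs"][key]
    return url, offset, offset + length - 1


# The table is plain JSON, so it can be stored next to the data as a sidecar.
sidecar_text = json.dumps(references)

url, first, last = locate(json.loads(sidecar_text), "sst/1.0")
print(url, first, last)
```

Because the table only points into the existing files, the chunks of many granules can be presented as one logical array without copying or rewriting any data.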

3.3.5.3 OPeNDAP in the Cloud

The OPeNDAP Hyrax server is optimized to address the contents of netCDF-4 and HDF5 files stored in the cloud using information in the annotated DMR++ [63] sidecar file. The DMR++ file for a specific data file encodes chunk locations and byte offsets so that access and parallel reads to specific parts of the file are optimized.


4.1 Overview

Metadata are information about data. Metadata could be included in a data file and/or could be external to the data file. In the latter case there should be a clear connection between the metadata and the data file. As with the other aspects of data product development, it is helpful to consider the purpose of metadata in the context of how users will interact with the data and how metadata are associated with (i.e., structurally linked to) the data.

Metadata are essential for data management: they describe where and how data are produced, stored, and retrieved. Metadata are also essential for data search/discovery and interpretation, including facilitating the users’ understanding of data quality. A data producer has a responsibility to provide adequate metadata describing the data product at both the product level and the file level. The DAAC that archives the data product is responsible for maintaining the product-level metadata (known as collection metadata in the CMR [17]). The CMR is a high-performance, high-quality, continuously-evolving metadata system that catalogs all data and service metadata records for EOSDIS. These metadata records are registered, modified, discovered, and accessed through programmatic interfaces leveraging standard protocols and APIs.

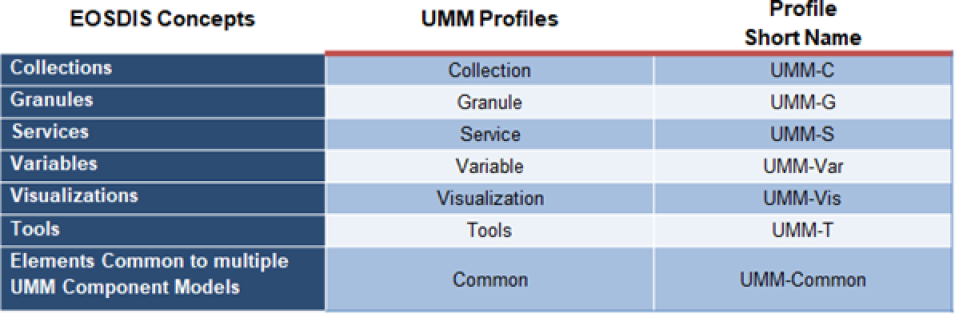

The ESDIS Project employs tools to interact with CMR based on the Unified Metadata Model (UMM). Profiles have been defined within the UMM based on their function or content description, such as Collection, Service, Variable, or Tool as shown in Table 1.

Table 1.