Introduction

“We are called to be architects of the future, not its victims.” —R. Buckminster Fuller

New technologies are always feared by some. In 1474, priest Filippo de Strata worried that the “brothel of the printing press” would dilute the quality of information made available to readers. In the mid-1800s, people were concerned that the railway would destroy community life. In 1969, Neil Postman and others worried that low-quality television programming would divert viewers from the information that mattered. Yet despite the fears and the fearmongers, these technologies went on to fundamentally change our lives and our world.

We are now witnessing the emergence of another transformative technology: large language models, or LLMs. The most familiar LLM application is ChatGPT, which is powered by the Generative Pre-trained Transformer (GPT) family of language models, but major technology companies are developing other LLMs as well. Applications like ChatGPT allow a user to ask questions and receive answers conversationally.

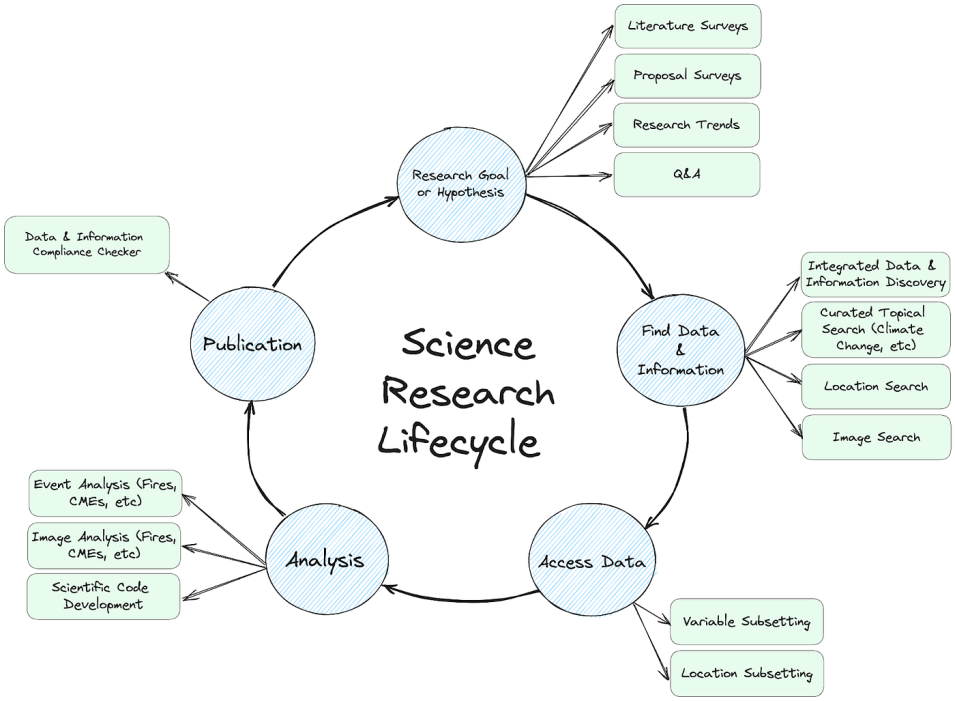

In particular, ChatGPT and similar tools open new pathways for information discovery. Instead of formulating concise, keyword-based queries, a user can simply converse with ChatGPT in natural language. Results are often returned in context rather than as isolated lists of datasets ranked by a relevance scheme that may or may not be useful or understandable to the user.

LLMs are promising, but they pose a number of challenges for science. Because many of these models are generative, they are prone to hallucinations, i.e., fabricating information in order to give the user an answer. Hallucinations are a particular problem for information providers, who have a responsibility to deliver reasonably trustworthy results to their users.

LLMs are built on the shoulders of existing content (not all of which can properly be called ‘giants’), and this creates several challenges. Persistent issues include the propagation of bias, the lack of attribution to the original content creators and gaps in coverage, whether because newer content postdates the training data or because of limits in the original corpus on which the model was trained.

Lastly, the development process and training data of proprietary LLMs are opaque, making it difficult to understand a model's strengths, weaknesses and gaps. These challenges run up against some of the central values of science, including reproducibility, transparency and attribution, as well as broader movements like open science. While these challenges are real, they should not stop the responsible adoption and use of technologies like LLMs.

We stand in a unique position to architect the future of science and its relationship to technologies like LLMs. Rather than falling victim to fear, we should work together to define a future that responsibly includes LLMs.

Open Science and AI/LLM Principles

For data systems and programs, AI and LLMs have the potential to transform the three main aspects of open science: increasing access to the scientific process and to scientific knowledge; making research and knowledge sharing more efficient; and understanding scientific impact. To integrate LLMs into data systems responsibly and ethically, we need principles that cover the entire AI lifecycle.

While a number of legislative acts and AI principles already exist, we still lack guiding principles for designing, implementing and operating AI that were developed through the lens of open science. Such principles must apply both to developing new models from scratch and to reusing existing ones.

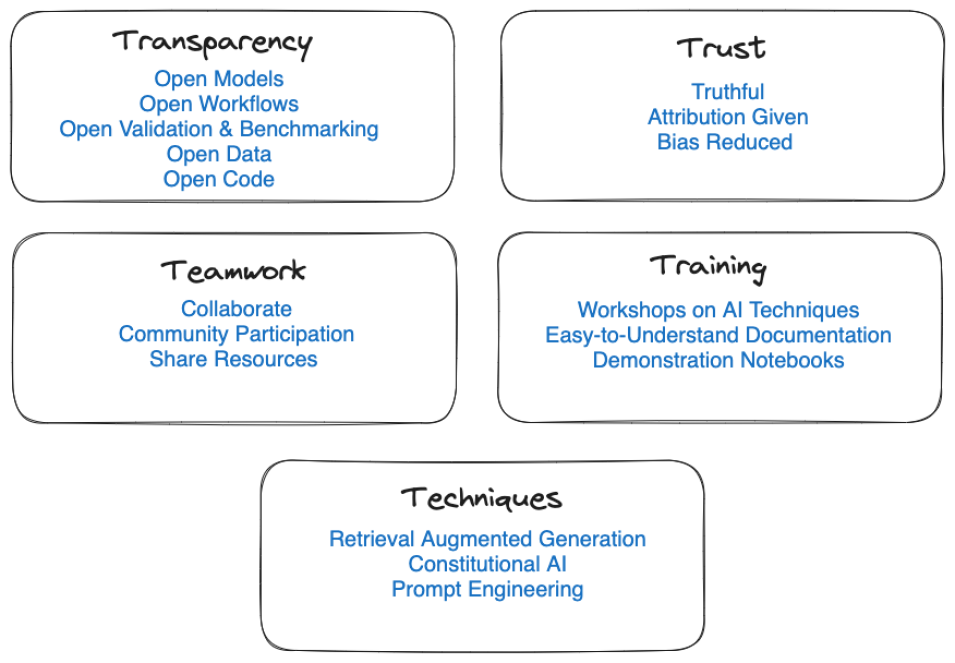

To fill this gap, we propose that open science AI principles can be summarized as the 5 Ts: Transparency, Trust, Teamwork, Training and Techniques. Transparency emphasizes a commitment to making models, workflows, data, code and validation techniques open. It also means openly disclosing when LLMs are used in applications. Trust focuses on earning the trust of LLM users by providing factual answers, attributing answers to their sources and reducing bias as much as possible.

Teamwork acknowledges that the community is critical to the responsible development and use of LLMs. This T emphasizes the value of collaborating across organizations, sharing resources and ensuring the scientific community can participate in every step of the process. Working with the community can also help ensure that valid use cases are defined for LLMs.

Training recognizes that users need instruction to interact responsibly with LLMs and to understand the strengths and weaknesses of those models. It focuses on building AI skills such as prompt engineering through workshops, documentation and notebooks.

Lastly, Techniques emphasizes understanding and monitoring the emerging techniques for building LLM applications, which is critical to building trustworthiness into their design. Techniques such as retrieval-augmented generation (RAG) or constitutional AI will be critical to ensuring that LLMs operate in a constrained and trustworthy manner. Because AI is changing so rapidly, we will need to continually assess the emerging techniques that determine how we engineer reliable AI applications to support open science.
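To make the idea of a constrained, attributable answer more concrete, the sketch below illustrates the retrieval half of RAG: relevant documents are fetched from a trusted collection first, and the model is then asked to answer only from them while citing their IDs. It is a minimal Python sketch under stated assumptions, not a reference implementation. It uses a toy in-memory corpus and scores documents by simple word overlap, a stand-in for the embedding-based vector search that production RAG systems typically use. The `Document`, `retrieve` and `build_prompt` names and the prompt wording are hypothetical, and the call to an actual LLM is deliberately left out because it depends on the model and API chosen.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str  # hypothetical identifier used to attribute answers to a source
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase, split on whitespace and strip simple punctuation.
    words = (w.strip(".,;:!?()\"'").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    # Toy relevance score: number of query terms a document shares.
    # Real RAG systems usually rank by embedding similarity instead.
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(d.text)), d) for d in corpus]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, sources: list[Document]) -> str:
    # Constrain the model to the retrieved passages and require bracketed
    # citations so each answer can be traced back to its original source.
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in sources)
    return (
        "Answer using ONLY the sources below and cite their IDs in brackets. "
        "If the sources do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical toy corpus for illustration only.
    corpus = [
        Document("doc-1", "Sea surface temperature data are available from 1981 onward."),
        Document("doc-2", "The mission measures atmospheric carbon dioxide."),
        Document("doc-3", "Open science promotes transparency and reproducibility."),
    ]
    question = "Where can I find sea surface temperature data?"
    print(build_prompt(question, retrieve(question, corpus)))
```

Passing source IDs through to the prompt is what makes attribution possible under the Trust principle: a cited `[doc-1]` can be mapped back to the original content creator, and the "say you do not know" instruction is one simple way to discourage the model from answering beyond its sources.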