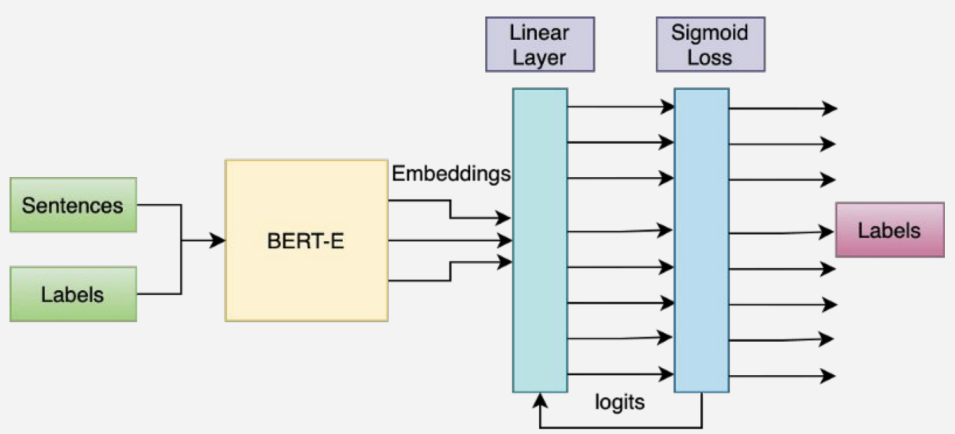

The machine learning team led by NASA's Interagency Implementation and Advanced Concepts Team (IMPACT) started from Sci-BERT, a BERT (Bidirectional Encoder Representations from Transformers) model pre-trained for science. The team fine-tuned it with an additional layer to create BERT-E, a domain-specific model for Earth science.

IMPACT has used BERT-E to develop the GCMD Keyword Recommender, a tool that suggests Global Change Master Directory (GCMD) keywords to data curators. Its predictions are based on the sentences and labels in existing dataset descriptions.
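
To make the recommendation step concrete, here is a minimal sketch of how per-keyword scores from a classifier could be turned into a short list of suggestions. It is not IMPACT's implementation. It assumes a multi-label setup in which the model emits one raw score (logit) per keyword, and the keyword list, the `recommend_keywords` function, the threshold, and the example scores are all hypothetical.

```python
import math

# Hypothetical keyword vocabulary for illustration only; the real GCMD
# keyword set is hierarchical and much larger.
KEYWORDS = ["PRECIPITATION", "SEA SURFACE TEMPERATURE", "AEROSOLS", "SOIL MOISTURE"]


def sigmoid(x: float) -> float:
    """Map a raw score (logit) to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def recommend_keywords(logits, keywords=KEYWORDS, threshold=0.5, top_k=3):
    """Return up to top_k (keyword, probability) pairs, highest probability first.

    Assumption: each keyword is scored independently (multi-label), so a
    description can receive several keywords at once.
    """
    if len(logits) != len(keywords):
        raise ValueError("expected one logit per keyword")
    scored = [(kw, sigmoid(z)) for kw, z in zip(keywords, logits)]
    # Keep only keywords the model is reasonably confident about.
    kept = [(kw, p) for kw, p in scored if p >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:top_k]


# Made-up logits standing in for model output on one dataset description.
example_logits = [2.0, -1.5, 0.3, -0.2]
suggestions = recommend_keywords(example_logits)
for keyword, probability in suggestions:
    print(f"{keyword}: {probability:.2f}")
```

In a sketch like this, the threshold and `top_k` control how many suggestions a curator sees. A curator-facing tool would likely favor a short, high-confidence list that a person then reviews.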