NASA’s Earth Observing System Data and Information System (EOSDIS) is responsible for one of the world’s largest collections of Earth observing data. Designing and enhancing the systems that deliver these data effectively and efficiently to data users worldwide are two primary tasks of EOSDIS System Architect Dr. Christopher Lynnes. Working with his colleagues in NASA’s Earth Science Data and Information System (ESDIS) Project, Dr. Lynnes is preparing for a tremendous growth in the volume of data in the EOSDIS collection and for new ways data users will interact with and use these data.

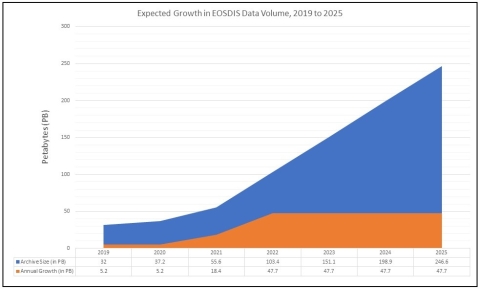

While the current volume of data in the EOSDIS collection is approximately 34 petabytes (PB), the launches of high-volume data missions such as the Surface Water and Ocean Topography (SWOT) mission and the NASA-Indian Space Research Organisation Synthetic Aperture Radar (NISAR) mission (both of which are scheduled for launch in 2021 or 2022) are expected to increase this data volume to approximately 247 PB by 2025, according to estimates by NASA’s Earth Science Data Systems (ESDS) Program (one petabyte of storage is equivalent to approximately 1.5 million CD-ROM discs). As Dr. Lynnes observes, this Big Data collection will require not only new ways for data users to use and interact with these data, but also new ways of thinking about what data are.
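
The CD-ROM comparison and the projected growth can be checked with a few lines of arithmetic. This rough sketch assumes a decimal petabyte (10^15 bytes) and a 650 MB disc; neither unit convention is stated in the source, and with a 700 MB disc the figure drops to roughly 1.4 million.

```python
# Rough check of the volume figures quoted above.
# Assumptions: decimal units (1 PB = 10**15 bytes) and a 650 MB CD-ROM.
PETABYTE = 10**15          # bytes
CD_ROM = 650 * 10**6       # bytes per disc (assumed capacity)

discs_per_pb = PETABYTE // CD_ROM       # number of full discs in one petabyte
growth_factor = 247 / 34                # projected 2025 volume vs. current volume

print(f"{discs_per_pb:,} CD-ROMs per PB")      # 1,538,461 CD-ROMs per PB
print(f"{growth_factor:.1f}x growth by 2025")  # 7.3x growth by 2025
```

Under these assumptions the collection would grow roughly sevenfold between now and 2025.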

When we talk about data system architecture, what does this mean and what components are we talking about?

With respect to EOSDIS, and particularly the EOSDIS Distributed Active Archive Centers [DAACs], the term “data system” refers to all of the components that handle the ingest, archive, and distribution or other forms of access for Earth observation data in the EOSDIS collection.

The one thing that all of the DAACs have in common is this aspect of ingest, archive, and distribution. One of the values that EOSDIS adds to this is enterprise-wide subsystems that link all of these DAAC holdings together. These subsystems include the CMR [Common Metadata Repository], a metrics system that [the DAACs] all contribute to, and a search interface for the EOSDIS catalog [Earthdata Search]. We’re also working on a services framework for doing data transformations.

What I do as a system architect, and I do this in partnership with my fellow system architect Katie Baynes, is to make all of these different systems fit together in the most seamless, harmonious way that we can. Katie handles the ingest and archive aspects of our system (what we used to call the “push” side) and I handle mostly the data use side (which is what we used to call the “pull” side). What I try to do is provide the architectural vision for how everything should fit together.

You have been working with NASA Earth observing data and data systems since 1991. How have you seen the EOSDIS data system evolve?

You know, when EOSDIS started there was no World Wide Web. All of our data, or almost all of our data, tended to be stored either off-line on tapes or, in some of the more advanced systems, in what we called a “near-line” system. These would be robotic archives serving either optical disks or tapes. For at least the first several years, this was almost exclusively the technology we used. It wasn’t until the early 2000s that we started putting most of our data on disk.

Our throughput to the end-users was much lower than it is today. When we were looking to support the SeaWiFS mission [Sea-viewing Wide Field of View Sensor, operational 1997 to 2010], we were concerned about how to support the distribution of 40 gigabytes per day to the end-user community. Back in 1994 that seemed like a real challenge!
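
For a sense of scale, 40 gigabytes per day works out to a sustained rate of under 4 megabits per second. The sketch assumes decimal gigabytes and traffic spread evenly over 24 hours, which real distribution traffic would not be.

```python
# Convert a daily distribution volume into a sustained network rate.
# Assumptions: decimal units (1 GB = 10**9 bytes) and evenly spread traffic.
GIGABYTE = 10**9
SECONDS_PER_DAY = 24 * 60 * 60

daily_bytes = 40 * GIGABYTE
megabits_per_second = daily_bytes * 8 / SECONDS_PER_DAY / 10**6

print(f"{megabits_per_second:.1f} Mbit/s")  # 3.7 Mbit/s
```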

It wasn’t really until we got to the early 2000s and were able to put all of our data onto disks that we finally broke through the user throughput barrier we had faced until then. This was mostly a result of disk prices coming down far enough that we could afford enough disks to hold all of our instrument data.

Given the expected significant increase in the volume of data in the EOSDIS collection over the next several years, how are you and your team staying ahead of the curve in ensuring that these data will be efficiently and effectively available to worldwide data users?

It’s important to note that this is not the first time we’ve seen this sort of exponential growth. The beginning of NASA’s Earth Observing System [EOS] era [in the late-1990s and early-2000s] had this same surge of growth in the amount of data in the EOSDIS collection. The first couple of years of EOS were difficult as we were trying to get as much data out as we could. Eventually the technology caught up with us and it became much easier to deliver data. Looking at the present, we’re actually fortunate in that I think the technology to deal with these massive volumes of data we’re expecting over the next five to six years—cloud computing technology—actually might be a bit ahead of us.

One of our main goals, one of our main drivers, to handle the expected huge growth in data volume is to put the data, particularly the high-volume data, into the commercial cloud. [Editor’s note: Once operational, NISAR is expected to generate approximately 86 terabytes of data each day, while SWOT will generate 20 terabytes of data per day, according to NASA ESDS assessments. These data volumes are higher than any previous missions.]
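
Annualizing the two daily estimates in the editor’s note shows why these missions dominate the growth projection. This back-of-the-envelope sketch assumes both missions produce data at the quoted rates every day of the year and uses decimal units (1 PB = 1,000 TB); actual production will vary.

```python
# Annualize the per-day volume estimates quoted in the editor's note.
# Assumptions: constant daily rates for a full year, decimal units.
DAILY_TERABYTES = {"NISAR": 86, "SWOT": 20}
DAYS_PER_YEAR = 365

annual_petabytes = sum(DAILY_TERABYTES.values()) * DAYS_PER_YEAR / 1000

print(f"{annual_petabytes:.1f} PB per year")  # 38.7 PB per year
```

Under those assumptions, a single year of output from the two missions would exceed the approximately 34 PB currently in the collection.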

The goal of putting EOSDIS data into the cloud is to give users a way to use the data at scale without them having to move the data. What we’re trying to accomplish with the Earthdata cloud migration is to support an analysis-in-place user experience that will enable users to do their work in the cloud—do complete dataset analyses if they want to—without them having to schlep the data all over the place just to get it down to their computer.

EOSDIS is not the only repository with Earth science data collections that are expected to grow significantly over the next few years. How are you working with other organizations, especially international agencies, to facilitate the access and use of these Big Data collections?

One of our main partnerships is with the European Space Agency (ESA). One of the activities I play a small part in is a joint activity we’re doing with ESA called the Multi-Mission Algorithm and Analysis Platform, or MAAP. This is an interesting project we’re doing to enable analysis and algorithm development for LIDAR and SAR data. Both NASA and ESA have substantial data holdings in these two areas and these holdings will increase significantly once NISAR is launched.

What we’re doing with MAAP is developing a cloud-based analysis platform where the users will be developing their data, algorithms, and processing techniques in the cloud right next to the data. We’re working hand-in-hand with ESA to develop a system that allows NASA and ESA to access each other’s data more or less seamlessly using a variety of interoperability protocols.

MAAP is able to reuse a number of existing EOSDIS components, such as the CMR, Earthdata Search, and Earthdata Login. Reusing all of these as part of the basic MAAP infrastructure lets the MAAP team spend more time on the data analysis and data processing aspects rather than on the more prosaic data management.

What do you see as the next steps in the evolution of accessing and working with Big Data?

The next step is really the analysis-in-place user experience. This turns out to raise a lot of interesting issues that matter more for serving data to users than they did when users were downloading their own data.

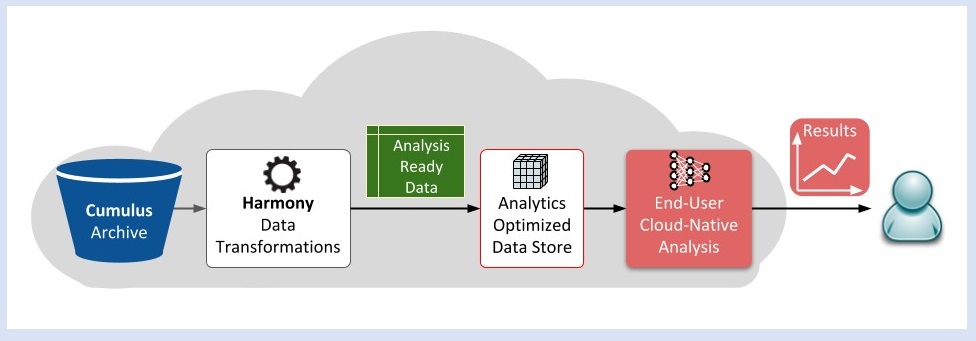

One of the issues is with the way that we store data—the data are not always in the form in which the user wants to analyze them. In other words, the data are not always analysis-ready [green box in above graphic]. Part of this has to do with the fact that, while there is some overlap, the requirements for storing data for data preservation purposes are not the same as the requirements for analyzing data.

At the same time, the kinds of analyses that users want to do differ from user to user. This makes it difficult to store data in a single analysis-ready form. One of the things we’re hoping to do with our new Harmony cloud data services system is to produce analysis-ready data on the fly for the user. The user will be able to customize the data via subsetting, regridding, and reprojection so that the data are ready to go right into their analysis.
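
To make customizing data via subsetting and regridding concrete, here is a minimal, pure-Python sketch of two such operations on a small regular latitude/longitude grid: a bounding-box spatial subset and a coarsening regrid that averages blocks of cells. The function names and the list-of-lists grid representation are hypothetical and are not Harmony’s interface; block averaging is just one simple regridding method, and reprojection is not shown.

```python
from statistics import fmean

def subset_bbox(lats, lons, values, south, north, west, east):
    """Keep only the grid cells whose coordinates fall inside the bounding box."""
    rows = [i for i, lat in enumerate(lats) if south <= lat <= north]
    cols = [j for j, lon in enumerate(lons) if west <= lon <= east]
    return ([lats[i] for i in rows],
            [lons[j] for j in cols],
            [[values[i][j] for j in cols] for i in rows])

def coarsen(values, factor):
    """Regrid to a coarser grid by averaging each factor x factor block.

    Leftover edge rows or columns that do not fill a whole block are dropped.
    """
    ny, nx = len(values) // factor, len(values[0]) // factor
    return [[fmean(values[i * factor + di][j * factor + dj]
                   for di in range(factor) for dj in range(factor))
             for j in range(nx)]
            for i in range(ny)]

# A 4 x 4 toy grid: latitudes 30..33, longitudes -100..-97.
lats = [30.0, 31.0, 32.0, 33.0]
lons = [-100.0, -99.0, -98.0, -97.0]
values = [[row * 4 + col for col in range(4)] for row in range(4)]

sub_lats, sub_lons, sub_values = subset_bbox(lats, lons, values, 31, 32, -99, -98)
print(sub_values)          # [[5, 6], [9, 10]]
print(coarsen(values, 2))  # [[2.5, 4.5], [10.5, 12.5]]
```

A production service would operate on real file formats and coordinate systems; the point here is only that each user’s request parameters (box, target resolution) determine the analysis-ready output.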

Let’s talk a bit more about Harmony. What is this effort and what are its objectives?

Currently, each DAAC has its own individual archive and they tend to have specific data transformation services that are tied to their individual archive. As we move data into the cloud, all of the DAACs will essentially be sharing the same archive system, which is a virtual archive. This means that all EOSDIS data will be in the same cloud and all of the data will be managed using the same system software.

We realized early on that since all of the EOSDIS data will be in the same virtual archive, maybe we can have a single data transformation framework that would allow the individual DAACs to put in specific data transformation system components or, in some cases, even use data service components that work on multiple datasets across several DAACs.

Harmony is designed to be this framework that allows the DAACs to plug in their services. It will also include some core data services for doing more common operations like basic variable subsetting.
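
The plug-in idea can be illustrated with a small registry that routes each request either to a DAAC-provided service registered for a specific collection or to a shared core service. This is a hypothetical sketch of the architectural pattern only; the class, method, and collection names are invented for illustration and do not describe Harmony’s actual interfaces.

```python
from typing import Callable, Dict, Tuple

# A service takes a request (a plain dict here) and returns a transformed result.
Service = Callable[[dict], dict]

class TransformationRegistry:
    """Hypothetical registry for plug-in data transformation services."""

    def __init__(self) -> None:
        self._by_collection: Dict[Tuple[str, str], Service] = {}
        self._core: Dict[str, Service] = {}

    def register_core(self, operation: str, service: Service) -> None:
        """Register a shared service usable on any collection."""
        self._core[operation] = service

    def register(self, collection: str, operation: str, service: Service) -> None:
        """Register a DAAC-provided service for one specific collection."""
        self._by_collection[(collection, operation)] = service

    def dispatch(self, collection: str, operation: str, request: dict) -> dict:
        # Prefer a collection-specific plug-in, then fall back to a core service.
        service = self._by_collection.get((collection, operation)) or self._core.get(operation)
        if service is None:
            raise LookupError(f"no {operation!r} service for {collection!r}")
        return service(request)

def core_variable_subset(request: dict) -> dict:
    """Core service: keep only the requested variables from a granule."""
    granule = request["granule"]
    return {name: granule[name] for name in request["variables"] if name in granule}

def example_reproject(request: dict) -> dict:
    """Stand-in for a DAAC plug-in; it only relabels the CRS, it does not resample."""
    return {**request["granule"], "crs": request["target_crs"]}

registry = TransformationRegistry()
registry.register_core("variable_subset", core_variable_subset)
registry.register("EXAMPLE_SAR_COLLECTION", "reproject", example_reproject)

granule = {"sigma0": [0.1, 0.2], "incidence_angle": [30.0, 31.0]}
subset = registry.dispatch("ANY_COLLECTION", "variable_subset",
                           {"granule": granule, "variables": ["sigma0"]})
print(subset)  # {'sigma0': [0.1, 0.2]}
```

Keeping the routing in one place is what lets a single service work across collections from several DAACs while still allowing collection-specific overrides.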

What are some of the other challenges you and your team are dealing with involving analysis-ready data?

Downloading data to personal computers, taking data out of the cloud, defeats much of the purpose of putting data in a format designed for analysis-in-place.

For instance, in our legacy systems, when we customize data through subsetting, regridding, and reprojection and do these data manipulations on-premises, we basically stage these analysis-ready data in an output directory and send you an email saying you have, say, 72 hours to download these data. This set-up obviously doesn’t work if we want you to stay next to the data and analyze the data right there [without downloading the data]. We need to give you more time to work with the data in the system precisely because you’re not downloading the data. What we’re looking at are user experiences where we might leave these data out for, say, a month or so to let you do the necessary data analysis, reductions, whatever have you with these analysis-ready data so that we can keep these data in the cloud without you downloading them to your local computers.

A second thing we are looking at is to make it easy to manage the analysis-ready data produced for you in the cloud. Currently, we give you a list of all the URLs where you can go to download the individual data files you’re requesting. But, again, if you’re doing analysis-in-place rather than downloading the data, just giving you a list of URLs is not that useful. It would be more useful if we give you metadata for each one of those data files. We’re exploring a user experience that would give you kind of a lightweight mini-catalog of the analysis-ready data we made for you so you could stay in the cloud and do the analysis right then and there.
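
A mini-catalog differs from a bare list of URLs in that each output file carries searchable metadata, so a user can select files by space or time without opening them. The sketch below is loosely modeled on the item structure of the SpatioTemporal Asset Catalog (STAC) specification, but it is not a STAC-conformant document; the field layout, the 30-day retention default (echoing the “month or so” mentioned above), the variable name, and the s3:// URLs are all hypothetical.

```python
from datetime import datetime, timedelta, timezone

def build_mini_catalog(job_id, outputs, retention_days=30, now=None):
    """Wrap a job's output files in per-file metadata plus an expiry time."""
    now = now or datetime.now(timezone.utc)
    return {
        "id": job_id,
        "expires": (now + timedelta(days=retention_days)).isoformat(),
        "items": [
            {
                "id": out["name"],
                "datetime": out["datetime"],
                "bbox": out["bbox"],  # [west, south, east, north], degrees
                "variables": out["variables"],
                "href": out["href"],
            }
            for out in outputs
        ],
    }

def hrefs_intersecting(catalog, bbox):
    """Return URLs of items whose bounding box overlaps the query box."""
    west, south, east, north = bbox
    return [item["href"] for item in catalog["items"]
            if item["bbox"][0] <= east and item["bbox"][2] >= west
            and item["bbox"][1] <= north and item["bbox"][3] >= south]

outputs = [
    {"name": "granule_001_subset", "datetime": "2019-07-01T00:00:00Z",
     "bbox": [-125, 24, -100, 50], "variables": ["surf_temp"],
     "href": "s3://example-bucket/job-123/granule_001_subset.nc"},
    {"name": "granule_002_subset", "datetime": "2019-07-01T00:00:00Z",
     "bbox": [-100, 24, -66, 50], "variables": ["surf_temp"],
     "href": "s3://example-bucket/job-123/granule_002_subset.nc"},
]
catalog = build_mini_catalog("job-123", outputs,
                             now=datetime(2020, 1, 1, tzinfo=timezone.utc))
print(catalog["expires"])                                # 2020-01-31T00:00:00+00:00
print(hrefs_intersecting(catalog, [-110, 30, -105, 40]))  # only granule_001's URL
```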

Looking at the next two-to-five years, what enhancements to using EOSDIS data can data users expect?

Expect to see a more consistent approach to data transformation services. Also, once these data transformation services are running in the cloud, we should be able to run them faster than we can in the current system because we can scale up as demand warrants.

Of course, users will also see the analysis-in-place experience coming to the fore. Doing analysis-in-place is very different from the way many users work with data today. One of the exciting things about how data analysis has been evolving, particularly over the past five years or so, is that there’s an enormous ecosystem of off-the-shelf tools that are being developed by several communities. A lot of these are being developed in the Python ecosystem and they’re tools like numpy, pandas, xarray, and dask. Some of these tools are actually optimized to allow for high-performance analysis in the cloud. One of the key things that has to happen for users to take full advantage of analysis in the cloud is for them to start doing their data analysis with the tools in these ecosystems.

Also, some of the ways that you think about data will be different. You have to relinquish the idea of working with individual data values and analyzing them, say, in a Python for loop. The new generation of these tools works by treating the data as a holistic data object: you apply a particular analysis operation to the entire data object at once. Tools like dask are able to automatically split up that analysis across multiple nodes by looking at the way that the data are chunked. This allows the user to get the benefit of this high-performance analysis without having to write any of the multi-threading or parallelization code themselves.
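
The chunk-based idea can be sketched without third-party libraries: the user asks for one operation on the whole data object, and the work is split by chunk, computed as partial results, and combined. This illustrates the concept only and is not how dask is implemented. It uses a thread pool from Python’s standard library, which shows the decomposition but, because of CPython’s global interpreter lock, will not speed up pure-Python arithmetic; libraries like dask instead schedule chunks across processes or cluster nodes. With xarray on dask-backed arrays, the user-facing code can be as short as calling a reduction such as `.mean()` on the array and then `.compute()` to run it.

```python
from concurrent.futures import ThreadPoolExecutor

def chunk(values, size):
    """Split a flat sequence into consecutive chunks of at most `size` items."""
    return [values[i:i + size] for i in range(0, len(values), size)]

def partial_stats(block):
    """Per-chunk partial result: (sum, count) is enough to rebuild a mean."""
    return sum(block), len(block)

def chunked_mean(values, chunk_size, workers=4):
    """Mean of the whole data object, computed chunk by chunk in a worker pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_stats, chunk(values, chunk_size)))
    total = sum(s for s, _ in partials)
    count = sum(n for _, n in partials)
    return total / count

data = list(range(1, 101))
print(chunked_mean(data, chunk_size=10))  # 50.5
```

The caller never loops over individual values; the chunk size, not the user’s code, determines how the work is divided.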

This ecosystem of tools is being promoted particularly by the Pangeo group. Some of the work the Pangeo group is doing with NASA datasets is being funded under NASA's ACCESS [Advancing Collaborative Connections for Earth System Science] Program. [For more information about NASA's Pangeo ACCESS project, please see the Earthdata article EOSDIS Data in the Cloud: User Requirements.]

These two things sort of go together: leaving the data in the cloud instead of downloading them onto your system, and thinking of the data not as individual values but as one large object you work with. Both of these ideas force you to relinquish the need to hold individual data values on your own computer. You just leave the data in the cloud and treat them as a complete data object in their own right.

What are you most excited about with all the new developments and enhancements for using data that are on the horizon?

I really am excited about this analysis-in-place idea. I know it’s going to be new and I know people are going to have to adjust the way they think about analyzing data.

For a personal example, I did a talk at the 2019 American Geophysical Union [AGU] Fall Meeting. For this talk, I wanted to analyze global AIRS [Atmospheric Infrared Sounder] Level 2 data over a 20-year time-series. When I did my initial search, I found something like 1.5 million files. I said, I’m never going to get this done in time for AGU. So, I scoped it down to be just the Continental U.S., but even this was over 100,000 files. Just acquiring all of these data from the server and downloading them onto my personal computer took me a week-and-a-half! This really brought home to me how nice it would be to do all of my data analysis without moving any of those data anywhere; just leaving the data in place and analyzing them where they sit.

I think this whole concept of analyzing data in-place is going to allow scientists to process much more data than they would have in the past so they can look at entire time-series, they can look at the entire globe, they can meld multiple datasets together. The data management hassle of moving data from here to there and then managing the data on your limited disk area—those limitations will essentially disappear.

It sounds like we’re on the verge of another golden age of data use.

I’ve been with NASA a long time, and I think we will at least see the beginnings of this change before I leave the agency. It’s certainly not the sort of change that will happen overnight. We have a good team working on these issues, not just in ESDIS but also at various DAACs. We’ve also been doing good collaborations with external groups like Pangeo.

Once we get the data into the cloud and, more importantly, once we help people get used to the way they can maximize their use of these data through cloud computing, I think that a lot of the limitations that we struggle with today we will struggle to remember tomorrow.